Think of software engineering best practices not as a rigid rulebook, but as a strategic playbook for building great digital products. Following this playbook is what separates successful tech companies from the rest, helping them sidestep technical debt, scale smoothly, and ultimately drive business growth.

You wouldn't build a skyscraper without an architectural plan, a solid foundation, or a skilled construction crew, right? Building software without established best practices is the digital version of that same mistake. It might feel like you're moving faster at first, but you're really just setting yourself up for instability, expensive rework, and a product that can't handle future growth.

These practices are what elevate software development from a chaotic art form into a disciplined engineering field. They give your team a shared language and a reliable framework to deliver consistent, predictable results. This guide is your roadmap, designed for CTOs, engineering managers, and even non-technical founders who need to understand what makes a development team tick.

Creating a culture of engineering excellence means paying close attention to four distinct areas. If you neglect one, the others will eventually suffer—just like a table with one leg shorter than the others.

Code: This is the most tangible output of your developers. It’s all about writing code that is clean, maintainable, and well-tested, making it easy for anyone on the team to understand and build upon.

Processes: These are the established workflows your team follows to work together. Solid processes bring consistency and alignment to everything you do, from the first planning session to the final deployment. You can learn more by exploring the different roles in agile software development that bring these processes to life.

Infrastructure: Your application doesn't exist in a vacuum; it runs on underlying systems. A robust infrastructure is what keeps your software scalable, secure, and reliable for your users.

People: At the end of the day, people build software. This pillar is about creating a culture of continuous learning, psychological safety, and open communication that empowers your team to do their best work.

Taking a holistic view of these pillars is the first real step toward building an engineering organization that doesn't just ship features, but creates lasting value. It’s the difference between a team constantly fighting fires and one that is strategically building for the future.

When you weave these software engineering practices into your organization, you create a self-reinforcing system. High-quality code makes your processes run smoother. Reliable infrastructure supports better code. And an empowered team drives improvements across the board. Let's dig into how to make that happen.

The architectural decisions you make early on have consequences that will echo for years. It's not just a technical choice; it's a foundational one that dictates how your product will grow, how your team will work, and how much pain you'll endure down the road.

Think of your software's architecture as the blueprint for a skyscraper. If the foundation is shaky, you can't just add more floors. At some point, the whole thing comes tumbling down under the weight of new features, more users, or a growing team. Getting this right is one of the most vital software engineering best practices, because a sound architecture doesn't just accommodate growth; it determines whether sustained growth is possible at all.

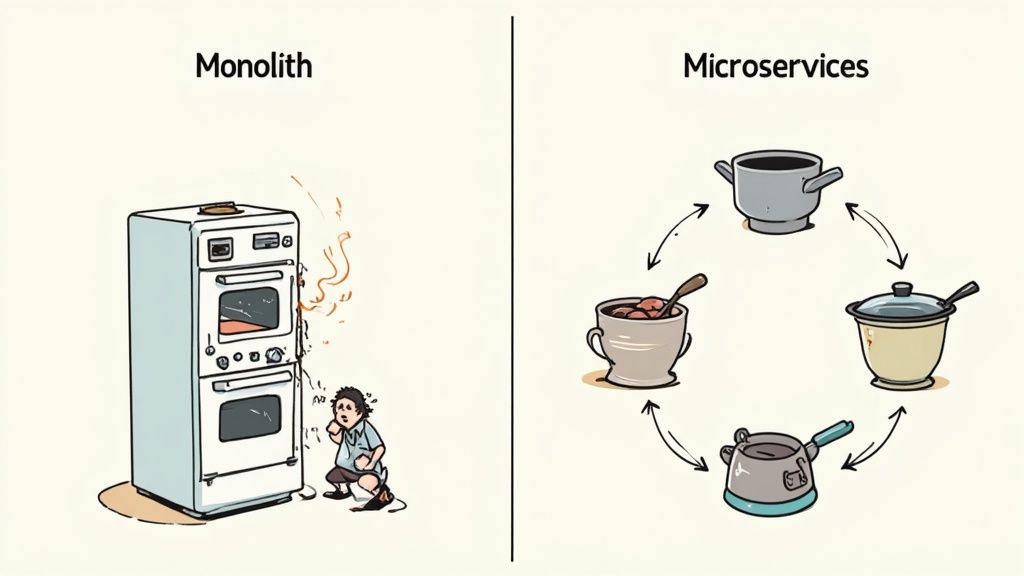

One of the first big debates you'll run into is whether to build a monolith or use microservices. Let's ditch the jargon and think about it like we're setting up a kitchen.

A monolithic architecture is like buying one of those giant, all-in-one kitchen stations. It has an oven, a stove, a microwave—everything bundled into a single unit. When you're just starting, this is great! It's simple, everything works together seamlessly, and you can get cooking right away.

The problem comes when something breaks. If the microwave fizzles out, the whole unit might have to go in for repair, leaving you unable to even bake a pizza. And what if you want to add an air fryer? That's a massive, risky operation that could mess up the oven.

A microservices architecture is the opposite. It’s like equipping your kitchen with separate, specialized appliances: a standalone oven, a microwave, a blender, and a toaster. If your blender breaks, you can just fix or replace it. The oven and toaster keep working just fine. Want to add an espresso machine? No problem. You just plug it in, and it doesn't affect anything else.

In the software world, this means a bug in your payment service (the blender) won't take down your product catalog (the oven). This ability to contain failures within a small "blast radius" is a huge win for keeping your application up and running.
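Splitting code into services does not contain failures by itself. The calling code still has to decide what happens when a dependency is down. The minimal Python sketch below shows one common approach, graceful degradation. The service functions and the `ServiceUnavailable` exception are hypothetical stand-ins for real network calls. In this sketch, the product page still renders when the payment service fails; it just shows no payment options.

```python
from typing import Callable


class ServiceUnavailable(Exception):
    """Hypothetical error raised when a downstream service cannot be reached."""


def render_product_page(
    product_id: str,
    fetch_product: Callable[[str], dict],
    fetch_payment_options: Callable[[str], list],
) -> dict:
    # The catalog is essential to this page, so its failures propagate.
    product = fetch_product(product_id)
    # Payment options live in a separate service. If it is down, degrade
    # gracefully so the failure stays inside that service's blast radius.
    try:
        payment_options = fetch_payment_options(product_id)
    except ServiceUnavailable:
        payment_options = []
    return {"product": product, "payment_options": payment_options}


if __name__ == "__main__":
    def catalog(pid: str) -> dict:
        return {"id": pid, "name": "Oven"}

    def payments_down(pid: str) -> list:
        raise ServiceUnavailable("payment service unreachable")

    print(render_product_page("p-1", catalog, payments_down))
```

In a real system you would usually pair this with timeouts, so that a slow dependency fails fast instead of leaving the caller hanging.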

So, which one should you choose? Honestly, anyone who gives you a definitive answer without knowing your situation is selling something. The right choice is always contextual—it depends on your team, your product's maturity, and what you're trying to accomplish.

For most startups just getting off the ground, starting with a monolith is often the smartest, most pragmatic move. It lets you build and ship features incredibly fast without getting bogged down in complex infrastructure. If you're in that early phase, our guide on how to build an MVP is a great resource for getting from idea to launch without overcomplicating things.

But as the team gets bigger and the product more complex, that once-speedy monolith can turn into a sluggish beast. That's usually the inflection point where breaking it apart into microservices starts to make a lot of sense.

Here’s a quick framework to help you think through the trade-offs:

| Factor | Favoring Monolith | Favoring Microservices |
|---|---|---|
| Team Size | A small, tight-knit team working in one codebase. | Multiple teams that need to work independently. |
| Product Stage | Early-stage, building an MVP, or a simple product. | A mature, complex product with many distinct functions. |
| Development Speed | Faster to get started and add the first few features. | Faster long-term, as teams can develop in parallel. |
| Operational Complexity | Low. You have one thing to build, test, and deploy. | High. You need strong DevOps skills to manage it all. |

No matter which path you take, a couple of other concepts are crucial for building software that lasts.

Domain-Driven Design (DDD) is a fancy term for a simple idea: build your software to reflect the real-world business it serves. By using the same language as your business experts (e.g., "shipment," "invoice," "customer"), you ensure your code is solving the right problems. It bridges the gap between the technical and business sides of the company.
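The small Python sketch below makes the idea concrete by having the code speak the business's language. The `Shipment` and `Invoice` classes are invented for illustration, as are the rules that only a pending shipment can be dispatched and an invoice can be paid once. They are not taken from any real domain model.

```python
from dataclasses import dataclass, field
from decimal import Decimal
from enum import Enum


class ShipmentStatus(Enum):
    PENDING = "pending"
    DISPATCHED = "dispatched"
    DELIVERED = "delivered"


@dataclass
class Shipment:
    tracking_number: str
    status: ShipmentStatus = ShipmentStatus.PENDING

    def dispatch(self) -> None:
        # Business rule stated in the same words the logistics team uses.
        if self.status is not ShipmentStatus.PENDING:
            raise ValueError("only a pending shipment can be dispatched")
        self.status = ShipmentStatus.DISPATCHED

    def deliver(self) -> None:
        if self.status is not ShipmentStatus.DISPATCHED:
            raise ValueError("only a dispatched shipment can be delivered")
        self.status = ShipmentStatus.DELIVERED


@dataclass
class Invoice:
    customer_id: str
    line_amounts: list[Decimal] = field(default_factory=list)
    paid: bool = False

    def total(self) -> Decimal:
        return sum(self.line_amounts, Decimal("0"))

    def mark_paid(self) -> None:
        if self.paid:
            raise ValueError("invoice is already paid")
        self.paid = True
```

A business expert can read `shipment.dispatch()` or `invoice.mark_paid()` and confirm whether the rule is right, which is the gap DDD is trying to bridge.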

And finally, your services will talk to each other through Application Programming Interfaces (APIs). You have to treat your APIs like sacred contracts. They need to be stable, well-documented, and reliable. When API contracts are solid, teams can work in parallel without stepping on each other's toes, trusting that the "agreement" between their services will hold. This discipline is what separates well-run engineering organizations from the chaotic ones.
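One way to treat an API as a contract is to write the contract down as code and check every payload against it at the boundary. The sketch below assumes a hypothetical `OrderV1` response with three required fields. It rejects missing or mistyped fields and ignores unknown extras. As a result, adding a field stays a non-breaking change, while removing or retyping one fails loudly. Schema tools such as OpenAPI or JSON Schema do this more thoroughly in practice.

```python
from dataclasses import dataclass, fields
from typing import Any


@dataclass(frozen=True)
class OrderV1:
    """Hypothetical v1 contract for an order returned by an orders API."""
    order_id: str
    customer_id: str
    total_cents: int

    @classmethod
    def from_payload(cls, payload: dict[str, Any]) -> "OrderV1":
        values = {}
        # Relies on annotations being real types (no postponed annotations).
        for f in fields(cls):
            if f.name not in payload:
                raise ValueError(f"contract violation: missing field {f.name!r}")
            value = payload[f.name]
            # Exact type match, so e.g. True is not accepted as an int.
            if type(value) is not f.type:
                raise ValueError(
                    f"contract violation: {f.name!r} must be {f.type.__name__}"
                )
            values[f.name] = value
        # Unknown extra fields are ignored: adding a field is non-breaking.
        return cls(**values)
```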

A brilliant architecture is a great starting point, but it’s the day-to-day work of writing and testing code that actually brings an application to life. When we talk about high-quality code, we're not chasing some impossible standard of perfection. We're talking about clarity, consistency, and building a foundation that helps your team ship features quickly and with confidence.

This is where the rubber really meets the road.

It all begins with a few core principles. One of the most important is DRY, which stands for "Don't Repeat Yourself." Think about it like a recipe. If you have a specific method for making a fantastic tomato sauce, you write it down once and just reference that recipe anytime you need it. You don't copy and paste the entire sauce-making process into every single dish's instructions.

In code, this means taking a piece of business logic, wrapping it up in a single, reusable function, and calling it wherever you need it. If that logic ever needs an update, you change it in one place. This simple habit drastically cuts down on bugs and keeps your system consistent.
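Here is the sauce recipe in code. In this minimal Python sketch, a hypothetical discount rule lives in one function, `apply_discount`. Both the cart and the invoice call it instead of repeating the arithmetic, so if the rounding rule changes, only one place changes.

```python
from decimal import ROUND_HALF_UP, Decimal


def apply_discount(price: Decimal, percent: Decimal) -> Decimal:
    """Return the price after a percentage discount, rounded to cents."""
    if not Decimal("0") <= percent <= Decimal("100"):
        raise ValueError("percent must be between 0 and 100")
    discounted = price * (Decimal("100") - percent) / Decimal("100")
    return discounted.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# Every feature reuses the single rule instead of re-implementing the math.
def cart_total(prices: list[Decimal], percent: Decimal) -> Decimal:
    return sum((apply_discount(p, percent) for p in prices), Decimal("0"))


def invoice_line(description: str, price: Decimal, percent: Decimal) -> str:
    return f"{description}: {apply_discount(price, percent)}"
```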

I've heard this a hundred times, especially from teams just starting to get serious about engineering discipline: "Writing tests just slows us down." On the surface, it feels true. But this is classic short-term thinking that creates massive long-term pain. The truth is, a solid testing suite is the single greatest accelerator you can give a development team.

Think of it as the safety net for a trapeze artist. Without it, every move is slow, cautious, and frankly, terrifying. With the net in place, the artist can attempt daring, complex maneuvers, knowing that a slip won’t be catastrophic. Automated tests give your developers that same confidence. They can refactor old code or add new features without the paralyzing fear of breaking something they can't see.

"A well-tested system is a developer's best friend. It transforms fear into confidence, allowing for bold changes and rapid innovation because you know the system will tell you if you've made a mistake."

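To build this safety net, you need to think in layers. Fast unit tests check individual functions in isolation. Integration tests check how components work together. End-to-end tests exercise the application the way a user would.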
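As a minimal sketch of the bottom layer, here is a unit test written with Python's built-in `unittest` module. The `normalize_email` function is a hypothetical example. Each test pins down one behavior, runs in milliseconds, and fails immediately if a later change breaks it.

```python
import unittest


def normalize_email(raw: str) -> str:
    """Hypothetical function under test: trim whitespace and lowercase."""
    return raw.strip().lower()


class NormalizeEmailTest(unittest.TestCase):
    def test_trims_and_lowercases(self):
        self.assertEqual(normalize_email("  Ada@Example.COM "), "ada@example.com")

    def test_already_normal_input_is_unchanged(self):
        self.assertEqual(normalize_email("bob@example.com"), "bob@example.com")


if __name__ == "__main__":
    unittest.main()
```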

Once the code is written and passing its tests, the next step is the peer code review. This is often misunderstood as a confrontational critique, but it’s one of the most powerful and collaborative tools you have. It’s more like an author handing their manuscript to a trusted editor. The goal isn't just to find flaws; it's to work together to make the final product as strong as it can be.

A healthy code review process does more than just improve the code. It builds a culture of shared ownership and continuous learning. Junior engineers learn from senior feedback, and senior engineers get fresh perspectives by seeing how others solve problems.

Here’s a simple checklist you can adapt for your team's pull requests:

Improving team velocity is a constant goal, and practices like these are fundamental to getting there. You can dig deeper into other strategies in our guide on productivity for developers.

Finally, you can take a whole category of arguments off the table during code reviews by automating your standards. This is where tools like linters and code formatters come in. A linter is like an automated proofreader that scans your code for stylistic errors, potential bugs, and any deviations from team conventions. A formatter automatically rewrites your code to ensure it follows a consistent visual style.
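Under the hood, a linter parses your code and checks it against rules. The minimal Python sketch below uses the standard `ast` module to implement a single, illustrative rule that flags bare `except:` clauses, which silently swallow unexpected errors. Real linters ship hundreds of such rules; this only shows the mechanism.

```python
import ast


def find_bare_excepts(source: str, filename: str = "<string>") -> list[str]:
    """Report every `except:` clause that names no exception type."""
    tree = ast.parse(source, filename=filename)
    problems = []
    for node in ast.walk(tree):
        # ExceptHandler.type is None exactly when the clause is a bare `except:`.
        if isinstance(node, ast.ExceptHandler) and node.type is None:
            problems.append(
                f"{filename}:{node.lineno}: bare 'except:' hides unexpected errors"
            )
    return problems
```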

By building these tools right into your development workflow, you ensure every single line of code that gets committed looks and feels the same. This makes the entire codebase easier to read and lets reviewers focus on what actually matters: the logic and architecture of the solution.

Unfortunately, many of these software engineering best practices are still not universally adopted. A recent survey of over 650 engineering leaders revealed that even organizations that call themselves "DevOps mature" often skip these basic disciplines. But the leaders who did implement them systematically saw strong results, including boosting feature deployment speed by up to 60% and cutting cloud costs by 15%. Discover more insights from the State of Software Engineering Excellence report.

Great architecture and clean code are the building blocks, but how do you reliably get those brilliant creations from a developer's machine into the hands of your users? The answer is automation. This is where you build a high-speed, automated assembly line for your software, getting rid of manual, error-prone steps and dramatically speeding up your time-to-market.

This assembly line is powered by Continuous Integration (CI) and Continuous Deployment/Delivery (CD). Think of CI/CD as the central nervous system of modern software development—a set of practices and tools that automate how code changes are built, tested, and deployed. This isn't just a technical upgrade; it's a fundamental business advantage.

So, what are we actually talking about here?

Continuous Integration is the habit of developers frequently merging their code changes into a central repository. Each time they merge, it automatically triggers a "build" and a whole suite of automated tests. The point is to catch integration bugs early and often, instead of waiting for a painful, large-scale merge day that nobody enjoys.

Continuous Delivery and Continuous Deployment take this a step further. Once the code sails through all the automated checks in the CI stage, one of two things happens. With Continuous Delivery, it's automatically deployed to a staging environment and kept ready for a human-approved release to production. With Continuous Deployment, it's pushed straight into production with no manual step. Either way, you get a seamless, low-friction path from an idea to a live feature.

The real power of a CI/CD pipeline is the rapid feedback loop it creates. Developers know within minutes if their change broke something, not days or weeks later. This allows teams to move faster with far greater confidence, knowing a safety net is always in place.

This process builds quality in at every step, following the essential flow from writing code to reviewing and testing it. That simple loop of write, review, test is the heart of a reliable automated pipeline.

Here’s something that trips up a lot of teams: you can’t just buy a tool and suddenly "do DevOps." DevOps is a cultural shift. It’s about breaking down the traditional walls between the development team (Dev) and the operations team (Ops). In a true DevOps culture, everyone shares responsibility for the software's entire lifecycle, from the first line of code to supporting it in production.

This shared ownership fosters collaboration and gets everyone pulling in the same direction. Instead of developers "throwing code over the wall" to operations, teams work together to build reliable, scalable, and secure systems. To make this work, it's essential to understand what DevOps automation is and how it acts as the engine for these collaborative practices.

Ultimately, DevOps and CI/CD are core software engineering best practices that pay off in real business wins, from faster time-to-market to fewer bugs slipping through to your users.

Building your own automated delivery system involves several key stages, each with a clear purpose and a whole ecosystem of tools to help.

The table below breaks down the components of a typical CI/CD pipeline. Think of it as the journey your code takes from a developer's laptop to a live production environment.

| Stage | Purpose | Example Tools |
|---|---|---|
| Source Control | Manage and track changes to the codebase, enabling collaboration. | Git, GitHub, GitLab, Bitbucket |
| Build | Compile the source code into an executable artifact. | Jenkins, CircleCI, Travis CI, Docker |
| Test | Run automated tests (unit, integration, etc.) to validate code quality. | Jest, Pytest, Selenium, Cypress |
| Security Scan | Analyze code for vulnerabilities and check dependencies for known issues. | SonarQube, Snyk, Veracode |
| Deploy | Push the built artifact to a staging or production environment. | AWS CodeDeploy, Spinnaker, Argo CD |
| Monitor | Observe application performance and health in production. | Datadog, Prometheus, New Relic |

Each stage is a critical checkpoint, ensuring that only high-quality, secure, and well-tested code makes its way to your users. Getting this pipeline right is one of the most impactful things you can do for your engineering team's velocity and your product's stability.
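The toy Python model below shows how these checkpoints fit together. The stage names and functions are hypothetical and do not reflect any real CI tool's API. Stages run in order, and the first failure stops everything. A `continuous_deployment` flag captures the difference between the two release styles. With Continuous Delivery, a passing build goes to staging and waits for human approval. With Continuous Deployment, it goes straight to production. Monitoring, which happens after release, is left out.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Stage:
    name: str
    run: Callable[[], bool]  # returns True if the checkpoint passes


def run_pipeline(stages: list[Stage], continuous_deployment: bool) -> list[str]:
    """Run stages in order, stopping at the first failing checkpoint."""
    log = []
    for stage in stages:
        ok = stage.run()
        log.append(f"{stage.name}: {'passed' if ok else 'FAILED'}")
        if not ok:
            log.append("pipeline stopped; nothing was deployed")
            return log
    if continuous_deployment:
        log.append("deployed to production automatically")
    else:
        # Continuous Delivery: releasable, but a human makes the final call.
        log.append("deployed to staging; awaiting manual approval for production")
    return log
```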

You can have the most elegant code and the most sophisticated automation on the planet, but it's only half the battle. At the end of the day, software is built by people. This makes the human element the single most important—and often overlooked—part of engineering excellence.

The real magic isn't just about hiring a team of individual rockstars. It's about creating an environment where they can work together effectively, constantly learn from each other, and collectively produce their best work. This is where so many companies get it wrong. They pour money into the latest tech stacks but starve the very processes and culture that allow a group of developers to become a cohesive, resilient team.

Frameworks like Agile and Scrum get a bad rap. People often see them as a rigid collection of meetings and rules to follow, but that misses the point entirely. At their core, these are systems designed to do a few things really well: force communication, create transparency, and drive continuous improvement.

Think of the classic Scrum ceremonies not as mandatory meetings, but as built-in feedback loops for the team.

A team that runs genuinely effective retrospectives is a team that's built to last. It’s a clear signal that they’re committed to learning and that it’s safe to admit mistakes in order to fix the root cause.

Beyond any specific methodology, a few fundamental principles are the bedrock of any team that can attract and keep top-tier talent.

Clear Documentation: Good documentation is just empathy in written form—empathy for your future self and for your teammates. It dramatically cuts down onboarding time, clears up confusion, and gives engineers the autonomy they crave. It's the lubricant that keeps the whole machine running smoothly.

Psychological Safety: This is non-negotiable. It's the shared belief that you can take risks—like asking a "dumb" question, admitting you broke the build, or challenging a senior engineer's idea—without being shamed or punished. Without psychological safety, you get a culture of silence where small problems fester until they become full-blown disasters.

Effective Onboarding: A thoughtful, structured onboarding process is your first, best chance to show a new hire they made the right choice. A great experience gets them feeling welcome, valued, and productive quickly, setting a positive tone for their entire time with your company.

The new kid on the block is the AI-powered coding assistant, which has thrown a new variable into the team productivity equation. These tools promise to speed everything up, but just handing them out without adjusting your team's workflow can lead to some… interesting results.

For instance, the initial hype suggested massive productivity jumps were just around the corner. But the real-world data is starting to paint a more complex picture. One controlled study with experienced developers found that those using AI assistance actually took 19% longer to finish their tasks compared to those who didn't.

What's really fascinating is that despite being slower, these developers felt like the AI had made them about 20% faster. That reveals a huge gap between perception and reality. The study itself covers the full details of AI's complex impact on developer productivity.

This doesn't mean AI tools are a bust. It simply means that learning how to use them effectively is a software engineering best practice in itself. It requires new training, updated code review standards, and a focus on using AI to augment—not replace—a developer's critical thinking. Building a resilient and effective engineering culture is the ultimate goal, and that means thoughtfully integrating every tool and every person into a single, cohesive whole.

In the old days, security was often the last hurdle before a release, and "observability" was a fancy word for looking at logs after a crash. That approach just doesn't fly anymore. Today, security and observability aren't features you tack on at the end—they are fundamental to building good software.

Think of it like building a skyscraper. You wouldn't pour the foundation and then try to figure out where the steel beams or electrical wiring should go. You engineer them into the blueprint from the very beginning. That's exactly how we need to treat security and observability.

This mindset is often called “Shift Left Security” or DevSecOps. The idea is simple: move security from the end of the line (the right) to the very beginning (the left). Instead of a separate security team flagging issues right before a launch, your developers get the tools to find and fix potential problems while they're still writing the code. It's one of the most effective ways to build safer, more reliable products.

Getting started with security integration doesn’t have to be some massive, complex project. You can begin by layering automated security checks directly into your CI/CD pipeline. These act as quality gates that every single code change has to pass through before it can move forward.
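A secret-scanning gate is one of the easiest checks to picture. The minimal Python sketch below scans file contents for two illustrative patterns and returns its findings: an AWS-style access key ID and a hard-coded password assignment. A CI step would fail the build when the list is non-empty. The patterns are simplified assumptions. Dedicated scanners use much larger, tuned rule sets and also check dependencies for known vulnerabilities.

```python
import re

# Illustrative patterns only; real scanners ship far larger, tuned rule sets.
SECRET_PATTERNS = {
    "AWS access key ID": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hard-coded password": re.compile(r"""(?i)\bpassword\s*=\s*["'][^"']+["']"""),
}


def scan_for_secrets(files: dict[str, str]) -> list[str]:
    """Return one finding per matching line; an empty list means the gate passes."""
    findings = []
    for path, text in files.items():
        for lineno, line in enumerate(text.splitlines(), start=1):
            for label, pattern in SECRET_PATTERNS.items():
                if pattern.search(line):
                    findings.append(f"{path}:{lineno}: possible {label}")
    return findings
```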

Here are a few essential starting points:

A great way to level up your security posture is to adopt a Zero Trust model. The core principle is 'never trust, always verify,' treating every request as if it could be a threat. You can learn how to implement zero trust security to build a much stronger, layered defense.
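In code, "never trust, always verify" means checking credentials on every request, even one that arrives from inside your own network. The sketch below hand-rolls this with an HMAC-signed, expiring token from Python's standard library, purely for illustration. The `SECRET_KEY`, the token format, and the function names are hypothetical. Real deployments typically rely on established mechanisms such as mutual TLS or tokens issued by an identity provider.

```python
import hashlib
import hmac
import time
from typing import Optional

# Hypothetical key for illustration; load a real one from a secrets manager.
SECRET_KEY = b"replace-with-a-managed-secret"


def sign(user_id: str, expires_at: int, key: bytes = SECRET_KEY) -> str:
    """Issue a signature binding a user ID to an expiry time (Unix seconds)."""
    message = f"{user_id}:{expires_at}".encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify_request(user_id: str, expires_at: int, signature: str,
                   now: Optional[float] = None,
                   key: bytes = SECRET_KEY) -> bool:
    """Verify every request, regardless of where on the network it came from."""
    now = time.time() if now is None else now
    if now >= expires_at:
        return False  # expired credentials are always rejected
    expected = sign(user_id, expires_at, key)
    # compare_digest avoids leaking how many characters matched via timing.
    return hmac.compare_digest(expected, signature)
```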

While security keeps the bad guys out, observability gives you a window into what’s happening inside your system. It's about instrumenting your code so you can ask any question about your application’s behavior and get a clear answer. Good observability means you can spot problems and fix them long before a customer ever notices.

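Truly understanding your system comes down to three key data types, often called the pillars of observability. Logs are timestamped records of discrete events. Metrics are numeric measurements aggregated over time, such as request rates or error counts. Traces follow the end-to-end path of a single request as it moves through your services.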
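The three signals can be sketched in a few lines of Python. In the minimal example below, a hypothetical `observed` wrapper times an operation and bumps a counter (the metric). It also emits one structured JSON log line (the log). Finally, it attaches a trace ID that a caller can pass along, so one request can be followed across services (a greatly simplified trace). The in-memory `Counter` stands in for a real metrics client. In practice, a library such as OpenTelemetry handles tracing and context propagation.

```python
import json
import logging
import time
import uuid
from collections import Counter
from typing import Callable, Optional, TypeVar

T = TypeVar("T")
logger = logging.getLogger("app")
metrics: Counter = Counter()  # stand-in for a real metrics client


def observed(operation: str, work: Callable[[], T],
             trace_id: Optional[str] = None,
             emit: Callable[[str], None] = logger.info) -> T:
    """Run `work` and record a metric, a structured log, and a trace ID for it."""
    # Trace: reuse the caller's ID so one request can be followed across services.
    trace_id = trace_id or uuid.uuid4().hex
    start = time.perf_counter()
    status = "error"
    try:
        result = work()
        status = "ok"
        return result
    finally:
        duration_ms = round((time.perf_counter() - start) * 1000, 3)
        # Metric: a cheap counter that dashboards and alerts can aggregate.
        metrics[f"{operation}.{status}"] += 1
        # Log: one machine-parseable event with the trace ID attached.
        emit(json.dumps({"op": operation, "status": status,
                         "trace_id": trace_id, "duration_ms": duration_ms}))
```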

By building these automated security checks and observability tools right into your development pipeline, you create a powerful system. Every release isn't just checked for new features; it's validated for security and equipped with the instrumentation needed to monitor its health in the wild. This builds a fantastic feedback loop, giving your team the confidence to ship faster because they know they have the visibility to handle whatever comes their way.

Even the best roadmaps hit a few bumps. It’s natural to have questions when you’re shifting how your team builds software. Let's tackle some of the most common ones that come up for founders and engineering leaders.

If you have to pick a single starting point, focus on two things together: peer code reviews and a basic Continuous Integration (CI) pipeline. This two-pronged approach gives you the biggest bang for your buck by improving both code quality and your development process right away.

Code reviews are fantastic because they create a culture of shared ownership and immediately start spreading knowledge across the team. At the same time, a simple CI pipeline that runs your tests automatically on every new piece of code acts as a crucial safety net. It gives your developers the confidence to move faster without worrying they'll accidentally break everything.

Getting developers to buy in is the big one, isn't it? The key is to frame these changes as tools that make your engineers' lives easier, not as another layer of management bureaucracy. Think about what developers truly dislike: reworking code, dealing with vague requirements, and getting paged at 2 AM to fix a production fire.

Show them how automated testing means fewer late-night bug hunts. Explain how a solid CI/CD pipeline gets rid of mind-numbing manual deployment steps. The best way to do this is to start small. Pick a single enthusiastic team or a pilot project to prove the concept. Success is contagious. Once other developers see that team shipping faster with fewer headaches, they'll want in.

The goal is to show, not just tell. Connect every practice directly to a pain point your team experiences daily. When they see these practices as solutions to their problems, adoption becomes a pull, not a push.

When it comes to picking an Agile framework, Scrum is the clear front-runner across the industry. Data shows that about 63% of organizations practicing Agile use Scrum because of its structured, iterative approach. And with 68% of companies now using Agile at a company-wide level, its principles have clearly spread well beyond individual teams. If you're curious, you can dig into more stats to see how leading companies are leveraging Agile.

But here’s the thing: the "best" framework is the one your team actually sticks with. Scrum is brilliant for teams that need structure, with its well-defined roles and meetings. On the other hand, Kanban is a fantastic, flexible alternative for teams whose work is more unpredictable. The most important thing is to just pick one, really commit to it, and then be willing to tweak it as you figure out what truly works for your team.
