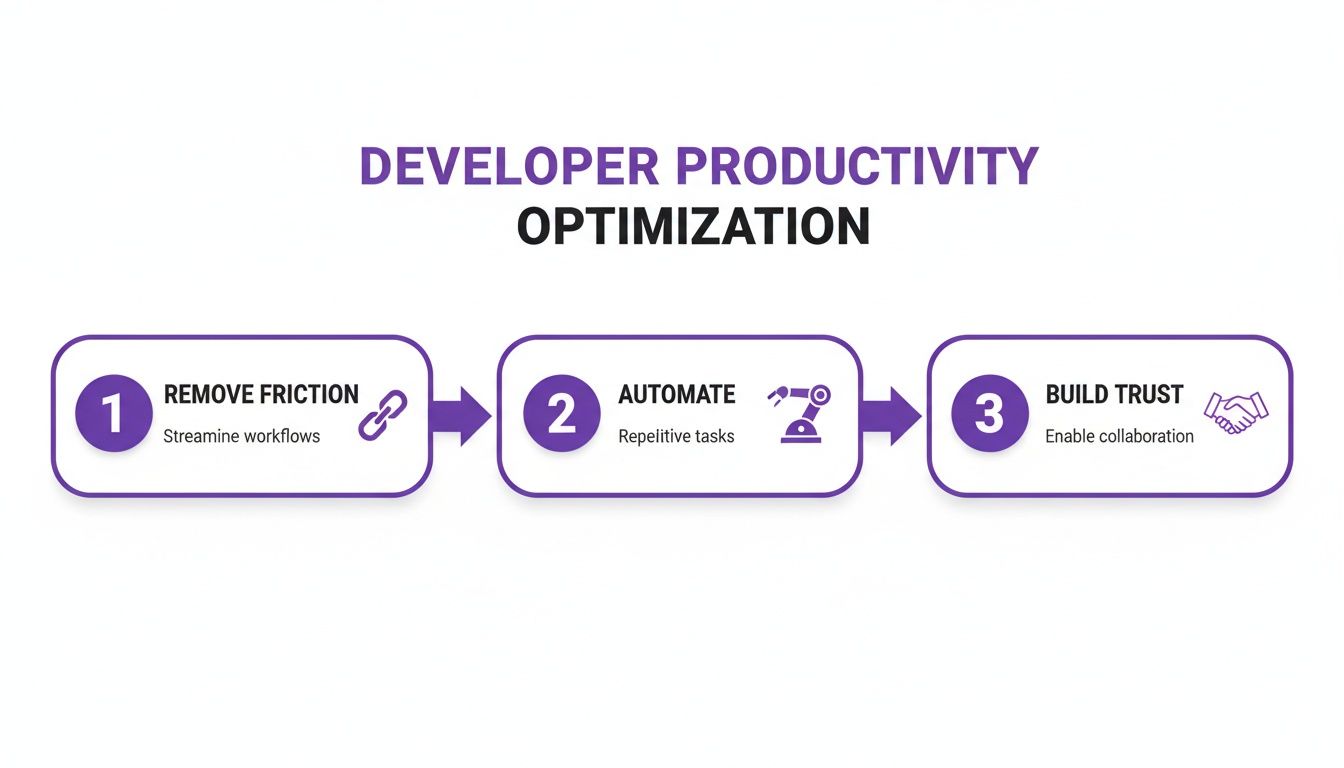

Boosting developer productivity really comes down to three things: getting rid of systemic friction, automating the repetitive stuff, and building a culture of trust. It's all about creating an environment where engineers can ship high-quality, valuable software faster and with way less headache.

For way too long, the conversation around developer productivity has been stuck in the past, fixated on metrics that are not just outdated but often do more harm than good. I’ve seen engineering leaders obsess over individual outputs like lines of code written, commits per week, or tickets closed. The problem? These numbers are easy to game and completely miss the point of what truly matters: delivering real business value, efficiently and for the long haul.

True productivity isn't about getting developers to type faster or clock more hours. It’s about building a system where smart, talented people can do their best work without running into constant roadblocks. This means we have to shift our focus from individual activity to team-level outcomes and the overall health of our development lifecycle.

A modern approach to developer productivity is built on creating an outstanding developer experience. It’s simple: when engineers are satisfied, engaged, and have the right tools and processes, they naturally become more effective.

This guide is built around four core pillars. They represent the key areas where you can make a real, measurable impact on your team's efficiency and happiness.

| Pillar | Core Focus | Key Outcome |
|---|---|---|
| Workflows & Processes | The path from local development to production. | Fast, reliable, and frictionless software delivery. |
| Tooling & Automation | Equipping teams with smart, efficient tools. | Reduced cognitive load and elimination of manual toil. |
| Culture & Experience | The human side of software development. | High morale, psychological safety, and talent retention. |
| Metrics & Measurement | Using data to find and fix real bottlenecks. | Informed, system-level improvements, not micromanagement. |

By focusing on these areas, you move beyond simplistic, individual-based metrics and start optimizing the entire system that produces your software.

The goal is not to measure the output of individuals but to understand the health and velocity of the entire software delivery system. A rising deployment frequency or a lower change failure rate is a far better indicator of productivity than any individual's commit count.

Ultimately, investing in these areas creates a powerful feedback loop. A great developer experience cuts down on friction, which in turn boosts morale and job satisfaction. This leads to less burnout, better retention of your top talent, and a much greater capacity for innovation.

When you figure out how to improve developer productivity, you’re not just tweaking a process—you're building a more resilient and impactful engineering organization. The result is better software, shipped faster, which gives you a serious competitive edge.

The path from a developer’s laptop to a live production server is often littered with frustrating roadblocks. Every manual handoff, every slow build, every delayed code review—they all act as speed bumps, killing momentum and forcing expensive context switches. If you really want to boost developer productivity, your focus needs to be on this lifecycle. Make it fast, automated, and as frictionless as possible.

This whole process is what we call a CI/CD (Continuous Integration/Continuous Deployment) pipeline, and it's the central nervous system of any high-performing engineering team. Get it right, and it provides the rapid feedback developers need to stay in the zone. Let it languish, and it becomes a constant source of pain.

The core idea is simple, really. You’re just trying to remove friction, automate what you can, and build trust in the system.

This flow shows that it’s not just about the tech. Automation is great, but it has to be built on a foundation of trust and a real commitment to getting rid of the hurdles holding your team back.

A great CI/CD pipeline is so much more than an automation script; it’s a developer's most important feedback loop. Its one job is to answer a single question, and answer it fast: "Did my change break anything?" Pipelines that are slow, flaky, or cryptic are absolute productivity killers.

I once worked with a startup where the full test suite took 45 minutes to run. A developer would push a change, go make coffee, get pulled into a meeting… and by the time the build failed, they’d completely forgotten what they were working on. That’s a colossal waste of time and mental energy.

Here’s how you build a pipeline that gives your team a real edge:

The gold standard I’ve seen is a feedback loop under 10 minutes. This is the magic number. It allows a developer to stay focused, push a commit, and get a clear pass/fail signal before their attention drifts elsewhere.
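If you want to see how close your own pipeline is to that target, a rough check like the one below can help. It's only a sketch: the function name, the sample durations, and the choice to report a 90th percentile are my assumptions, and you'd feed it real run times exported from whatever CI system you use.

```python
FEEDBACK_BUDGET_MINUTES = 10  # the "under 10 minutes" target discussed above


def feedback_loop_report(durations_minutes, budget=FEEDBACK_BUDGET_MINUTES):
    """Summarize how a batch of CI run durations compares to the budget."""
    if not durations_minutes:
        raise ValueError("need at least one pipeline run")
    ordered = sorted(durations_minutes)
    n = len(ordered)
    # "Under 10 minutes" is treated as strictly less than the budget.
    within_budget = sum(1 for d in ordered if d < budget)
    # Nearest-rank 90th percentile, using integer math for ceil(0.9 * n).
    p90_index = (9 * n + 9) // 10 - 1
    return {
        "runs": n,
        "share_within_budget": within_budget / n,
        "p90_minutes": ordered[p90_index],
    }


# Hypothetical durations (in minutes) for the last ten pipeline runs.
recent_runs = [6, 7, 8, 9, 12, 45, 7, 8, 9, 6]
report = feedback_loop_report(recent_runs)
print(report)
```

Looking at the slow tail rather than the average matters here: a single 45-minute run like the one in the startup story is exactly what breaks a developer's focus, and an average would hide it.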

Keep in mind that a major drag on any pipeline is technical debt. Investing time in understanding and managing technical debt is non-negotiable for keeping things moving. Every shortcut and piece of messy code makes builds more complex and tests more brittle, directly slowing you down.

In most organizations, the biggest human bottleneck in the entire process is code review. When reviews are treated as a bureaucratic checkpoint, they create long wait times and an adversarial "me vs. you" dynamic. The real goal isn't to nitpick every tiny flaw; it's to share knowledge and ensure quality without grinding everything to a halt.

You need to shift the culture. Turn your code review process from a gate into a moment for collaborative learning.

Here’s how you can make reviews faster and more effective:

Just picture a developer waiting two days for feedback on a critical feature. By the time the comments finally roll in, they've already switched gears to another project. The cost of that context switch is astronomical. In contrast, a team with a rapid review culture just flows.

The right tools can be a massive force multiplier for your engineering team. The wrong ones? They just create more noise and cognitive overhead. When we talk about improving developer productivity, it’s about strategically adopting new tech that genuinely helps, not just adding another subscription to the monthly bill. The focus has to be on tools that integrate smoothly and deliver a clear, measurable return.

Nowhere is this more obvious than with the boom in AI coding assistants. These tools promise to take on the repetitive, boilerplate tasks that drain a developer's creative energy. Think generating unit tests, writing documentation, or scaffolding entire components. This frees up your senior engineers for the high-level architectural thinking that actually drives innovation.

This is a classic example of an AI coding assistant in action—giving real-time suggestions right inside the IDE. The magic here is the immediate feedback and code completion that keeps a developer deep in a flow state, eliminating the need to constantly switch context.

The hype around AI-powered tools is everywhere, but the data is finally starting to back it up. Field studies with over 4,800 developers have shown that AI tools deliver a very real 26.08% average productivity boost. This isn't just theory; it’s from real-world experiments where developers using AI coding assistants finished their tasks significantly faster.

Even more telling, 68% of these developers reported saving more than 10 hours per week. That's basically a full extra workday they get back to focus on complex problem-solving instead of routine coding. The gains come from AI handling the tedious stuff like boilerplate code, initial bug fixes, and writing tests.

But it’s not a silver bullet. The hidden cost of AI can be the time spent debugging "almost right" code. An assistant might generate a function that looks perfect at a glance but contains a subtle logical flaw that takes an hour to hunt down. This is where a thoughtful evaluation process becomes critical.

Before you roll out any new tool team-wide, run a pilot program. Get a small, cross-functional group to use it and measure their experience. Collect qualitative feedback through surveys and quantitative data like cycle time and PR size to make a decision based on facts, not hype.
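For the quantitative half of a pilot, the comparison can be as simple as the sketch below. Everything in it is illustrative: the function name is mine, and the cycle times and PR sizes are made-up numbers standing in for data you'd pull from your own tracker. It compares medians so that one unusually slow ticket doesn't dominate the result.

```python
from statistics import median


def percent_change_in_median(control, pilot):
    """Percent change in the median from the control group to the pilot group.

    A negative result means the pilot group's median is lower.
    """
    if not control or not pilot:
        raise ValueError("both groups need at least one data point")
    baseline = median(control)
    return (median(pilot) - baseline) / baseline * 100


# Hypothetical pilot data: PR cycle time in hours and PR size in changed lines.
control_cycle_hours = [30, 42, 36, 50, 28]
pilot_cycle_hours = [24, 30, 27, 40, 33]
control_pr_lines = [200, 400, 300]
pilot_pr_lines = [150, 240, 300]

cycle_change = percent_change_in_median(control_cycle_hours, pilot_cycle_hours)
size_change = percent_change_in_median(control_pr_lines, pilot_pr_lines)
print(f"cycle time: {cycle_change:+.1f}%, PR size: {size_change:+.1f}%")
```

With groups this small the numbers are noisy, so treat them as one input next to the survey feedback rather than as proof on their own.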

Adding a new tool should solve a clear problem, not just follow the latest trend. Whether you're looking at a project management app or an AI assistant, run it through these critical questions first.

To keep a pulse on what’s out there, it's worth exploring discussions around new tools that can enhance productivity. Staying informed helps you spot the genuine game-changers from the fleeting fads.

While AI gets most of the headlines, other tools are just as vital for a productive engineering environment. Focusing on the right toolset is a core part of improving overall productivity for developers.

Don't overlook these categories that can have a massive impact:

The goal is to build a cohesive ecosystem where every tool has a clear purpose and plays well with the others. You want to avoid "tool sprawl," where your team is juggling a dozen different platforms that don't talk to each other. A streamlined, integrated toolchain is the bedrock of a high-velocity team.

Let's be honest: you can have the slickest CI/CD pipeline and the smartest AI tools, but if your team culture is broken, none of it really matters. At the end of the day, developer productivity is a human challenge, not just a technical one. The real force multiplier is an environment built on trust, autonomy, and psychological safety.

This isn’t about catered lunches or office perks. It's about creating a space where engineers feel genuinely empowered to do their best work without looking over their shoulder. It’s the foundation that all truly high-performing teams are built on.

Psychological safety is a fancy term for a simple, powerful idea: your team feels safe enough to take risks. It means an engineer can ask a "dumb" question, challenge a senior dev's assumption, or—most importantly—admit a mistake without fearing humiliation or punishment.

When that safety is missing, developers go silent. They’ll spend hours stuck on a bug rather than ask for help, worried they'll look incompetent. They won't flag a potential disaster in a project plan because they don't want to be labeled "negative." That silence is a productivity killer.

In a psychologically safe environment, "I broke the build" is met with, "Okay, let's figure out how to fix it together," not, "How could you be so careless?" This blameless approach turns failures into learning opportunities, not career-limiting events.

Cultivating this requires real effort from leadership. It means you publicly celebrate the engineer who flagged a critical issue early, even if it meant delaying a launch. It means managers openly admitting their own mistakes to show that vulnerability is a strength, not a weakness.

If you want to absolutely crush an engineer's motivation, micromanage them. Nothing kills productivity faster than telling smart, creative people not just what to do, but exactly how to do it. The best work happens when you give your team a clear goal and then get out of their way.

Real ownership isn't just about assigning tickets. It’s about nurturing a deep sense of responsibility for the outcome. You do that by bringing developers into the decision-making process from the very beginning.

This isn’t about losing control; it’s about building trust. When your team has genuine autonomy, they stop being ticket-takers and start becoming problem-solvers.

Burnout isn't a badge of honor or a sign of weakness; it's a symptom of a systemic failure. A burned-out developer isn't just unproductive—they are actively disengaged, make more mistakes, and are a massive flight risk. Protecting your team's mental energy is a direct investment in your company's future.

Cognitive load is the enemy here. A developer juggling three "urgent" projects while being bombarded by Slack notifications and back-to-back meetings simply cannot produce quality work.

Here are a few battle-tested strategies to stop the spiral:

A supportive culture isn't a "nice-to-have." It is the engine that drives sustainable high performance, turning a group of individual coders into a cohesive, innovative, and highly productive engineering team.

If you can't measure it, you can't improve it. But you have to measure the right things. Forget vanity metrics like lines of code; they tell you nothing about value. The real key to understanding and boosting developer productivity is using data that reflects the health of your entire delivery process. This is exactly what DORA metrics were designed for.

Born from years of rigorous research by the DevOps Research and Assessment (DORA) team, this framework gives you a clear, objective view into your team's software delivery performance. It shifts the focus from individual output to system-level outcomes, turning murky "gut feelings" about productivity into concrete, data-driven insights.

DORA is built on four core metrics that, together, create a holistic picture of your team's ability to deliver value to users—balancing both speed and stability.

Deployment Frequency: How often are you pushing code to production? Elite teams often deploy on-demand, sometimes multiple times a day. If your deployments are a weekly or monthly event, it might point to bottlenecks in your review process or a shaky CI/CD pipeline.

Lead Time for Changes: This measures the time from a developer committing a change to that change actually running in production. It’s the A-to-Z of your delivery pipeline. A long lead time is often a symptom of slow code reviews, manual testing phases, or overly complex deployment procedures.

Change Failure Rate: What percentage of your deployments blow up in production? This is a raw, unfiltered look at your quality and stability. A high failure rate is a massive red flag, pointing to problems in your testing, code quality, or review standards.

Time to Restore Service: When something inevitably breaks, how fast can you fix it? This metric shows how resilient your system is and how quickly your team can respond to incidents, debug issues, and roll out a fix. Top-tier teams often recover in less than an hour.
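To make these four definitions concrete, here is a minimal sketch of how they could be computed from a list of deployment records. The `Deployment` record and the `dora_summary` function are hypothetical names I'm using for illustration, not part of any DORA tooling, and the sketch assumes an incident starts at the moment the failing deploy goes out.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    committed_at: datetime                  # when the change was committed
    deployed_at: datetime                   # when it reached production
    failed: bool = False                    # did it cause a production failure?
    restored_at: Optional[datetime] = None  # when service recovered, if it failed


def dora_summary(deployments, period_days):
    """Compute the four DORA metrics for deployments made within period_days."""
    if not deployments or period_days <= 0:
        raise ValueError("need at least one deployment and a positive period")
    lead_hours = [
        (d.deployed_at - d.committed_at).total_seconds() / 3600 for d in deployments
    ]
    failures = [d for d in deployments if d.failed]
    # Assumption: the incident clock starts when the failing deploy went out.
    restore_minutes = [
        (d.restored_at - d.deployed_at).total_seconds() / 60
        for d in failures
        if d.restored_at is not None
    ]
    return {
        "deploys_per_week": len(deployments) / (period_days / 7),
        "median_lead_time_hours": median(lead_hours),
        "change_failure_rate": len(failures) / len(deployments),
        "median_time_to_restore_minutes": (
            median(restore_minutes) if restore_minutes else None
        ),
    }


# Hypothetical two-week window with four deployments, one of which failed.
start = datetime(2025, 1, 6, 9, 0)
sample = [
    Deployment(start, start + timedelta(hours=2)),
    Deployment(start + timedelta(days=2), start + timedelta(days=2, hours=4)),
    Deployment(
        start + timedelta(days=7),
        start + timedelta(days=7, hours=6),
        failed=True,
        restored_at=start + timedelta(days=7, hours=6, minutes=30),
    ),
    Deployment(start + timedelta(days=9), start + timedelta(days=9, hours=8)),
]
summary = dora_summary(sample, period_days=14)
print(summary)
```

Note that every number here is computed per team and per time window. There is deliberately no author field on the record, which keeps the output a picture of the system rather than of any one engineer.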

These four metrics create a necessary tension. Deployment Frequency and Lead Time are all about speed, while Change Failure Rate and Time to Restore are about stability. Pushing for speed at the expense of stability is a surefire way to burn out your team and frustrate your users. For a broader look at how these fit into your strategy, it’s worth exploring different KPIs for software development that complement the DORA framework.

The real magic of DORA isn't in the numbers themselves, but in the conversations they start. These metrics should never be weaponized to compare individual developers or micromanage their work. Think of them as a diagnostic tool for the entire system—a shared scoreboard that helps everyone spot where things are getting stuck.

DORA metrics are the starting point for a conversation, not the final word on performance. A rising Change Failure Rate isn't a reason to point fingers; it's a signal to ask, "What in our process is letting more bugs slip through?"

Let’s say you notice your team's Lead Time for Changes has been slowly creeping up over the past month. Instead of just telling people to "work faster," you can dig into the data together. Is the code review queue constantly backed up? Are build times getting longer? Is the staging environment flaky? The metric guides you to the problem area, so the team can collectively find the root cause and fix it.
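One way to run that investigation is to split lead time into stages and see which one grew the most. The sketch below assumes you can get per-change durations for each stage; the stage names, the sample numbers, and the `slowest_growing_stage` helper are all hypothetical.

```python
from statistics import mean


def slowest_growing_stage(previous, current):
    """Find the pipeline stage whose average duration grew the most.

    previous and current map a stage name to a list of durations in hours.
    Returns the stage name and the per-stage growth in hours.
    """
    shared = [stage for stage in previous if stage in current]
    if not shared:
        raise ValueError("no stages in common between the two periods")
    growth = {stage: mean(current[stage]) - mean(previous[stage]) for stage in shared}
    worst = max(growth, key=growth.get)
    return worst, growth


# Hypothetical stage durations (hours) for last month versus this month.
last_month = {
    "review_wait": [4, 6, 5],
    "build": [0.2, 0.3, 0.25],
    "staging": [1, 2, 1.5],
}
this_month = {
    "review_wait": [10, 14, 12],
    "build": [0.3, 0.3, 0.3],
    "staging": [2, 1, 1.5],
}

stage, growth = slowest_growing_stage(last_month, this_month)
print(stage, growth)
```

In this made-up data the review queue accounts for almost all of the increase, which points the team toward review practices rather than build tooling.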

With AI tools becoming a fixture in every developer's workflow, DORA metrics are more important than ever for cutting through the hype and measuring real impact. It might be surprising, but recent findings show that just throwing AI at a problem doesn't guarantee a productivity boost—it can even slow down experienced developers.

The trick is to be strategic. Use AI to attack toil: automating documentation, generating boilerplate tests, or assisting with code reviews. That’s where the real wins are. For instance, Booking.com saw a 16% throughput gain by using AI this way, which resulted in higher merge rates (+10-20%) and smaller pull requests (down 15-25%).

DORA metrics can help you track this kind of success. You might set a goal to double your deployment frequency to 2-3 deploys per week and cut your lead times, all while ensuring your change failure rate stays low. It's an interesting landscape: while 69% of AI agent users feel their personal productivity has improved, only 17% are seeing that translate to a significant team-level impact yet. You can discover more insights from this 2025 AI developer study to get the full story.

Quantitative data is powerful, but it's only half the story. The numbers can tell you what is happening, but they rarely tell you why. To truly understand your team's productivity, you have to pair the hard data from DORA with qualitative feedback from the people doing the work.

When you combine the "what" from DORA metrics with the "why" from your engineers, you create a powerful, continuous improvement engine. It's a balanced approach that ensures you're not just optimizing a process—you're genuinely improving the developer experience.

Even with a solid game plan, engineering leaders always run into practical questions when it's time to execute. Getting the human and technical sides of developer productivity right takes a bit of finesse. Here are some straightforward answers to the questions I hear most often.

If you're running a small team, forget about boiling the ocean. Don't get bogged down trying to implement every DORA metric and shiny AI tool at once. Your biggest wins will come from tackling the most significant points of friction first.

The best way to find them? Hold a team retrospective and ask one simple question: "What's the most frustrating part of your day-to-day work?"

Listen carefully to the answers. They'll point you straight to your bottlenecks. In my experience, it almost always comes down to a few key areas:

A small win, like cutting build times in half, gives your team an immediate boost and builds the momentum you need for the bigger, more complex improvements down the road.

This is a big one, and the distinction is critical: you measure the health of the system, not the output of individuals. Framing it this way is the key to building trust. Metrics should be diagnostic tools that help the team spot areas for improvement together, never a leaderboard to rank engineers.

Focus entirely on team-level outcomes. If the Change Failure Rate is creeping up, that's not one person's performance problem. It's a signal for the whole team to look at the testing process or review practices. The conversation should always be, "How can we make this better?"

Use metrics like DORA to get a read on the efficiency of your delivery process. Are deployments getting faster? Is lead time shrinking? These numbers reflect your collective effort. But don't stop there. Always pair this quantitative data with qualitative feedback from your one-on-ones and team health checks. This gives you the full story—the "what" from the data and the "why" from your people.

Not always. The real value of an AI coding assistant like GitHub Copilot depends entirely on your team's context. These assistants are fantastic at churning out boilerplate code, writing unit tests, and solving well-defined problems, especially in modern codebases.

However, their usefulness can drop off a cliff when you're dealing with a complex legacy system or a highly specialized, niche domain. In those cases, developers can end up spending more time correcting "almost right" AI suggestions than they would have spent just writing the code themselves. The tool becomes a hindrance, not a help.

The best way to know for sure is to run a small, controlled experiment.

This gives you real-world data from your own team, so you can make a decision based on results, not just industry hype.
