Code reviews are a critical pillar of modern software development, yet they often devolve into a rubber-stamping exercise or a source of friction. The difference between a high-performing engineering team and a struggling one frequently lies in their approach to this fundamental practice. A superficial "LGTM" (Looks Good To Me) offers little value, while overly nitpicky or adversarial reviews can damage morale and slow down delivery. The goal is to find a productive middle ground where reviews are both efficient and effective.

Effective reviews don't just catch bugs; they are a powerful mechanism to distribute knowledge, enforce standards, mentor developers, and build a culture of collective ownership over the codebase. When done right, the process strengthens the entire team, making the software more robust, maintainable, and secure. A well-structured review process is an investment that pays dividends in quality and velocity. For a comprehensive overview of how to maximize the impact of your review process, explore these best practices for code review that help ship better code efficiently.

This guide moves beyond generic advice to provide a definitive list of actionable code review best practices you can implement immediately. We will cover everything from structuring pull requests and leveraging automation to fostering a positive feedback culture and using metrics for continuous improvement. Whether you're a startup founder, a CTO, or a developer, these strategies will help you transform your team's review process from a necessary chore into a powerful engine for shipping high-quality, resilient software. You will learn how to set clear goals, define responsibilities, integrate security checks, and conduct process retrospectives to ensure your code reviews deliver maximum value.

A code review without clear goals is like a journey without a destination. Reviewers offer subjective feedback, authors get frustrated, and the process fails to improve code quality. Establishing explicit objectives is one of the most fundamental code review best practices because it transforms the review from a mere formality into a powerful quality assurance mechanism. By defining what to look for, you ensure every review is consistent, objective, and aligned with team-wide engineering principles.

These standards are more than just style guides; they are a comprehensive blueprint for quality. They should cover everything from architectural patterns and security vulnerabilities to performance benchmarks and code readability. For instance, Google's well-documented engineering practices emphasize small, focused changes and rigorous standards for code health, a model many successful teams emulate.

To effectively implement these goals, document them in a centralized, accessible location like a team wiki or a repository in your version control system. This single source of truth prevents ambiguity and serves as a reference for both new and experienced developers.

By setting these foundational rules, you create a culture of predictable quality. This approach aligns with broader principles of engineering excellence. For a deeper dive into creating robust technical foundations, you can explore more on these software engineering best practices.

Attempting to review a massive pull request with thousands of lines of code is a recipe for disaster. Reviewer fatigue sets in quickly, crucial bugs are missed, and feedback becomes superficial. One of the most impactful code review best practices is to keep changesets small and focused on a single responsibility. This dramatically improves the reviewer's ability to thoroughly analyze the logic, spot subtle errors, and provide meaningful feedback, ultimately leading to higher-quality code.

This principle is validated by major tech companies. Studies from Google and Microsoft show an inverse relationship between the size of a change and the quality of the review: the larger the change, the less thorough the review. Smaller reviews, often capped at 200-400 lines, receive more comments per line and are completed much faster. By limiting the scope, you transform a daunting task into a manageable and effective quality gate.

Integrating this practice requires a shift in how developers approach feature development, encouraging incremental commits over monolithic changes. A key strategy is to break down large features into a series of smaller, logical pull requests that can be reviewed and merged independently.
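As a rough illustration of enforcing a size limit, the sketch below totals the lines reported by `git diff --numstat` and compares them to a threshold. The function name, the `SizeReport` type, and the 400-line default are assumptions made for this example (the default simply echoes the 200-400 line range mentioned above), not a standard tool.

```python
from dataclasses import dataclass

# Hypothetical threshold, chosen from the 200-400 line range discussed above.
MAX_CHANGED_LINES = 400


@dataclass
class SizeReport:
    changed_lines: int
    files: int
    within_limit: bool


def check_pr_size(numstat: str, limit: int = MAX_CHANGED_LINES) -> SizeReport:
    """Summarize `git diff --numstat` output and compare it to a size limit."""
    changed = 0
    files = 0
    for line in numstat.splitlines():
        parts = line.split("\t")
        if len(parts) != 3:
            continue  # skip blank or malformed lines
        added, deleted, _path = parts
        files += 1
        # Binary files are reported as "-<TAB>-<TAB>path"; count them as zero lines.
        if added.isdigit() and deleted.isdigit():
            changed += int(added) + int(deleted)
    return SizeReport(changed, files, changed <= limit)
```

A team could feed this the output of `git diff --numstat` against the target branch and post a reminder on the pull request when `within_limit` is false, rather than blocking the merge outright.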

By adopting this discipline, you not only improve review quality but also accelerate the development cycle. Small changes are easier to merge, reduce the risk of complex conflicts, and make the entire code integration process smoother and more predictable. This approach aligns with agile principles of iterative development and continuous delivery. For more on optimizing developer workflows, you might find valuable insights in this guide on how to hire developers with experience in agile environments.

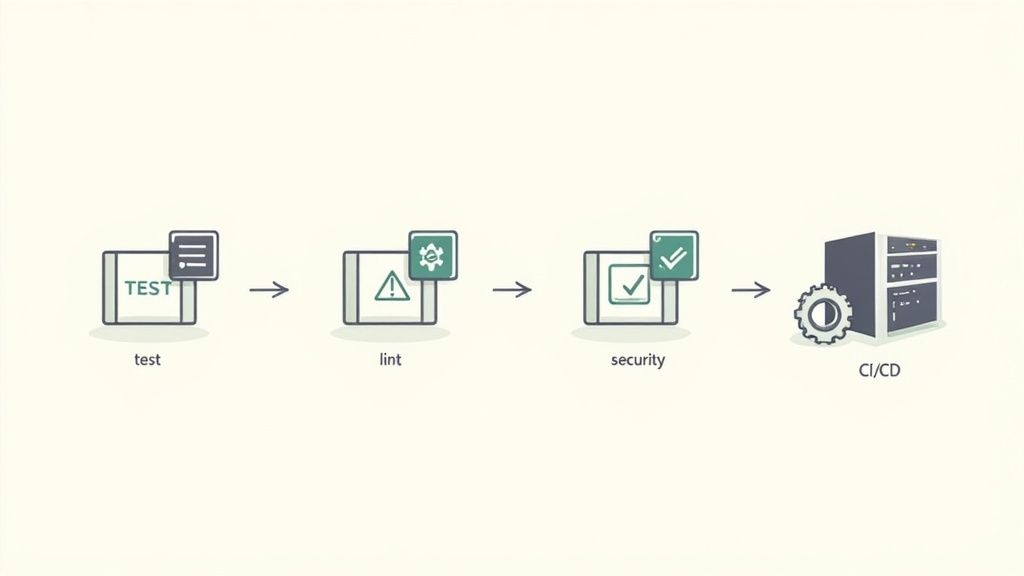

Manual code reviews are essential for catching nuanced logic and architectural flaws, but they are an expensive use of a developer's time for issues that a machine can find. Automating initial checks is one of the most impactful code review best practices because it allows human reviewers to focus their valuable cognitive energy on complex problem-solving. By integrating automated testing and analysis into a Continuous Integration/Continuous Deployment (CI/CD) pipeline, you create a powerful quality gate that filters out common errors before they ever reach a human reviewer.

This automated process acts as the first line of defense, ensuring that every pull request has already passed a baseline of quality. Platforms like GitHub Actions, GitLab CI/CD, and Jenkins can automatically run unit tests, security scans, and linters, providing immediate feedback. A pull request that fails these checks can be blocked from merging, saving the team countless hours by catching bugs early.

Integrating automation effectively requires a well-structured pipeline that provides clear, actionable feedback directly within your version control system. For seamless integration of automated testing, adhering to robust CI/CD Pipeline Best Practices is essential, ensuring that code reviews are efficient and effective within the delivery process.
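To make the idea of a quality gate concrete, here is a minimal sketch of a script a CI job might call. The check list is hypothetical and only runs Python's built-in `unittest` discovery; the function names `run_checks` and `gate` are assumptions for this example, and a real pipeline would normally declare these steps in the CI platform's own configuration.

```python
import subprocess
import sys

# Hypothetical check list for illustration; substitute the linters, test
# runners, and scanners your project actually uses.
EXAMPLE_CHECKS = {
    "unit tests": [sys.executable, "-m", "unittest", "discover"],
}


def run_checks(checks):
    """Run each command and record whether it exited with status 0."""
    results = {}
    for name, command in checks.items():
        completed = subprocess.run(command, capture_output=True, text=True)
        results[name] = completed.returncode == 0
    return results


def gate(results):
    """Print a summary and return an exit code: 0 only if every check passed."""
    for name, passed in results.items():
        print(f"{'PASS' if passed else 'FAIL'}  {name}")
    return 0 if all(results.values()) else 1
```

A CI step could end with `sys.exit(gate(run_checks(EXAMPLE_CHECKS)))`, so that any failing check produces a non-zero exit code and the pull request can be blocked from merging.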

By offloading repetitive checks to automated systems, you elevate the role of the human reviewer. Instead of checking for missing semicolons, they can analyze architectural integrity, question logical assumptions, and mentor fellow developers on more substantive issues.

A toxic code review environment crushes morale, stifles innovation, and ultimately hurts code quality. The most effective reviews are not adversarial but collaborative, treating each interaction as a mentorship opportunity. Fostering a culture of respectful, constructive feedback is one of the most critical code review best practices because it transforms the process from a judgmental gate into a shared learning experience. When feedback is specific, actionable, and delivered with positive intent, developers feel empowered to grow, leading to a stronger team and a better product.

This philosophy is championed by companies like Etsy, which built a culture of blameless peer reviews to encourage experimentation and learning. The goal is not to criticize the author but to improve the code together. Instead of making demands, reviewers should ask questions and offer suggestions, shifting the tone from accusatory to inquisitive. This approach ensures that every comment serves to educate and elevate, rather than to find fault.

Building a positive review culture requires intentional effort and consistent modeling of desired behaviors. It starts with framing feedback in a way that is helpful and empathetic, focusing on the code's logic and impact, not the person who wrote it.

For example, a specific, actionable comment might read: "The name d is unclear; could we rename it to elapsedTimeInSeconds for clarity?"

A code review process with ambiguous roles quickly descends into chaos. When everyone is a reviewer, no one is accountable, leading to superficial checks or, worse, complete neglect. One of the most critical code review best practices is defining who reviews what and who has the final say. By establishing clear roles, you ensure that every change receives appropriate scrutiny from the right experts, transforming reviews from a bottleneck into an efficient quality gate.

This clarity prevents diffusion of responsibility and empowers specialists to focus on their areas of expertise, whether that's security, performance, or system architecture. For example, the Linux kernel's maintainer hierarchy and GitHub's CODEOWNERS feature are both successful models that formalize ownership and streamline the approval process, ensuring changes are vetted by those most qualified to assess them.

Formalizing roles and responsibilities requires documenting them clearly and integrating them directly into your workflow. The goal is to make the correct assignment of reviewers automatic and transparent, removing guesswork for the author.

Use GitHub's CODEOWNERS file or GitLab's Approval Rules to automatically assign specific individuals or teams to review changes in certain parts of the codebase.

Defining these roles is foundational to an organized and effective development lifecycle. To learn more about how these duties fit into a broader team structure, you can explore a detailed breakdown of roles in agile software development.
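The automatic reviewer assignment described in this section can be sketched in a few lines. This is a simplified illustration, not GitHub's or GitLab's actual matching logic: the team handles and patterns are hypothetical, and `fnmatchcase` globbing only approximates the gitignore-style patterns that real CODEOWNERS files use. What it does preserve is the convention that the last matching rule wins.

```python
from fnmatch import fnmatchcase

# Hypothetical ownership rules: (glob pattern, reviewers). Later rules override
# earlier ones, mirroring the "last match wins" convention of CODEOWNERS files.
RULES = [
    ("*", ["@org/core-team"]),
    ("docs/*", ["@org/tech-writers"]),
    ("src/auth/*", ["@org/security"]),
]


def reviewers_for(path, rules=RULES):
    """Return the owners from the last rule whose pattern matches the path."""
    owners = []
    for pattern, team in rules:
        if fnmatchcase(path, pattern):
            owners = team
    return owners


def reviewers_for_change(paths, rules=RULES):
    """Collect the distinct reviewers needed for every file in a change."""
    return {owner for path in paths for owner in reviewers_for(path, rules)}
```

Because a catch-all rule comes first and specialist rules follow, every file has a default owner while sensitive areas such as authentication are routed to the people best placed to review them.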

A pull request sitting unreviewed is a bottleneck that halts development momentum and creates context-switching overhead. Establishing clear response time expectations, or Service Level Agreements (SLAs), is a crucial code review best practice that prevents code from languishing. It transforms the review process from a passive waiting game into a predictable, efficient part of the development lifecycle, ensuring that valuable changes are integrated promptly.

Defining these SLAs creates a shared understanding of urgency and responsibility. It provides authors with a clear timeline for feedback and empowers reviewers to manage their time effectively. For instance, teams at Microsoft often aim for a 24-hour review turnaround, while Uber implements priority-based tiers to ensure critical fixes are addressed first. This structure minimizes delays and keeps the entire engineering pipeline flowing smoothly.

To make SLAs effective, they must be realistic, communicated clearly, and tracked consistently. Document these expectations in your team's central knowledge base and integrate them into your workflow.
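Tracking an SLA can start as a small script. The sketch below flags review requests that have waited longer than their tier allows; the tier names and hour values are assumptions for illustration (the 24-hour default echoes the turnaround mentioned above), and it counts wall-clock hours, ignoring weekends and time zones of individual reviewers.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical priority tiers and limits, in hours.
SLA_HOURS = {"critical": 4, "normal": 24}


def overdue_reviews(pending, now, sla_hours=SLA_HOURS):
    """Return (pr_id, hours_waiting) for reviews past their SLA, longest wait first.

    `pending` is an iterable of (pr_id, priority, requested_at) tuples.
    Unknown priorities fall back to the "normal" tier.
    """
    late = []
    for pr_id, priority, requested_at in pending:
        waited = (now - requested_at) / timedelta(hours=1)
        if waited > sla_hours.get(priority, sla_hours["normal"]):
            late.append((pr_id, round(waited, 1)))
    return sorted(late, key=lambda item: item[1], reverse=True)
```

The result could feed a daily reminder in the team's chat channel, which keeps the expectation visible without turning it into a punitive measure.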

By implementing these time-based expectations, you build a resilient and high-velocity review process. This proactive approach ensures that code moves forward efficiently, reducing developer frustration and accelerating delivery.

Relying solely on human reviewers to catch everything from style violations to complex architectural flaws is inefficient and prone to error. The most effective code review processes combine the precision of automation with the nuanced insight of human expertise. This hybrid approach is a cornerstone of modern code review best practices, as it frees developers from tedious, repetitive tasks and allows them to concentrate on what they do best: solving complex problems.

Automated tools excel at enforcing objective standards like code formatting, linting, and identifying known security vulnerabilities. This creates a consistent baseline for quality before a human ever sees the code. For example, GitLab integrates security scanning directly into its CI/CD pipelines, flagging potential issues automatically. This leaves the manual review phase to focus on higher-level concerns like architectural soundness, logical correctness, and long-term maintainability.

Integrating this balanced approach involves setting up your development pipeline to handle objective checks first, presenting a clean and compliant changeset for human analysis. This strategic layering saves time and reduces cognitive load on your team.

By offloading objective, repeatable checks to machines, you empower your developers to perform more meaningful, high-value reviews. This not only improves code quality but also makes the review process faster and more engaging for everyone involved.

What gets measured gets improved. Without data, efforts to enhance your code review process are based on guesswork, not evidence. Documenting and tracking key metrics is one of the most impactful code review best practices because it provides objective insights into process efficiency, team health, and code quality trends. It helps you identify bottlenecks, prevent reviewer burnout, and make data-driven decisions to refine your workflow.

These metrics transform abstract goals like "faster reviews" into concrete, measurable objectives. By analyzing data on review time, approval rates, and defect escape rates, teams can pinpoint specific areas for improvement. For example, platforms like LinearB and the built-in analytics in GitLab and GitHub provide dashboards that visualize these trends, similar to how engineering giants like Google and Facebook use internal tools to monitor and optimize their development cycles at scale.

The goal is not to micromanage but to gain a holistic understanding of your process health. Start by identifying metrics that align with your team's quality and velocity goals, and ensure they are tracked transparently.
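As a starting point, a handful of summary statistics is often enough. The sketch below assumes review data has already been exported into simple records; the `Review` fields and the three metrics are illustrative choices for this example, not a prescribed set.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Review:
    hours_to_first_response: float
    comments: int
    lines_changed: int


def summarize(reviews):
    """Compute a few process-health indicators from a list of Review records."""
    if not reviews:
        raise ValueError("no reviews to summarize")
    approved_silently = sum(1 for r in reviews if r.comments == 0)
    return {
        "median_hours_to_first_response": median(
            r.hours_to_first_response for r in reviews
        ),
        "median_lines_changed": median(r.lines_changed for r in reviews),
        # A high share here can hint at rubber-stamping.
        "share_approved_without_comments": approved_silently / len(reviews),
    }
```

Medians are used rather than means so that one unusually large or slow pull request does not distort the picture of a typical review.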

By tracking the right data, you turn your code review process into an evolving system that continuously improves. For a deeper look into relevant performance indicators, you can explore more about these key performance indicators for software development.

Treating security as an afterthought is a costly mistake that leaves applications vulnerable to attack. A security-focused code review shifts this critical concern "left," integrating it directly into the development lifecycle rather than leaving it for a final, pre-deployment check. This approach is one of the most impactful code review best practices because it proactively identifies and mitigates vulnerabilities like data leaks, injection flaws, and insecure configurations before they ever reach production. By embedding security into every review, you cultivate a defense-in-depth mindset across the entire engineering team.

This practice transforms the review process into an active security gateway. It goes beyond functional correctness and code style to scrutinize changes for potential exploits. For instance, Microsoft's Secure Development Lifecycle (SDL) mandates security reviews as a core component, a practice that has significantly reduced vulnerabilities in its products. Similarly, following guidelines like the OWASP Top 10 during reviews provides a structured framework for spotting the most common web application security risks.

Integrating security effectively requires a combination of automation, manual inspection, and team education. The goal is to make security a shared responsibility, not just the domain of a specialized team.
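The automated part of that combination can begin very simply. The sketch below scans the added lines of a unified diff for a few risky patterns; the three patterns and their labels are hypothetical examples chosen for illustration, and dedicated scanners use far larger and more precise rule sets. It is meant to prompt a human look, not to replace one.

```python
import re

# Hypothetical patterns for illustration only.
SUSPICIOUS = {
    "hardcoded credential": re.compile(
        r"(?i)(password|secret|api_key|token)\s*=\s*['\"][^'\"]+['\"]"
    ),
    "TLS verification disabled": re.compile(r"verify\s*=\s*False"),
    "dynamic code execution": re.compile(r"\beval\("),
}


def flag_added_lines(diff):
    """Return (diff_line_number, finding, text) for suspicious added lines."""
    findings = []
    for number, line in enumerate(diff.splitlines(), start=1):
        # Only inspect additions; skip the "+++ b/file" header lines.
        if not line.startswith("+") or line.startswith("+++"):
            continue
        text = line[1:].strip()
        for label, pattern in SUSPICIOUS.items():
            if pattern.search(text):
                findings.append((number, label, text))
    return findings
```

Limiting the scan to added lines keeps the findings relevant to the change under review, so reviewers are not asked to triage pre-existing issues in every pull request.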

By making security an explicit and mandatory part of the review process, you reduce organizational risk and build more resilient, trustworthy software.

A code review process, no matter how well-designed, can become stale or inefficient over time. Without a mechanism for feedback, friction points, tool limitations, and cultural issues can fester, reducing the effectiveness of your reviews. One of the most critical code review best practices is to treat the process itself like a product: continuously iterate and improve it through regular retrospectives. This practice ensures your review culture evolves with your team, tools, and project demands.

By dedicating time to analyze what’s working and what isn’t, teams can proactively address bottlenecks and refine their approach. Companies like Spotify and Slack integrate this philosophy into their engineering culture, using regular feedback loops, similar to agile sprint retrospectives, to ensure their development practices remain optimized and developer-friendly. This transforms the review from a static process into a dynamic, team-owned system.

Integrating retrospectives into your workflow is straightforward and yields significant returns on team health and productivity. The key is to create a safe, structured environment where everyone feels comfortable sharing honest feedback.

| Practice | Implementation complexity | Resource requirements | Expected outcomes | Ideal use cases | Key advantages |
|---|---|---|---|---|---|
| Establish Clear Code Review Goals and Standards | Moderate: document standards and align team | Time to write docs; linters/formatters | Consistent evaluations; measurable quality gains | Growing teams; onboarding new reviewers | Reduces ambiguity; speeds reviews |
| Keep Reviews Small and Focused | Low–Moderate: policy and discipline | PR size limits; process enforcement | Faster reviews; higher issue detection | High-velocity development; frequent PRs | Better comprehension; easier rollbacks |
| Implement Automated Testing and CI/CD Integration | High: pipeline design and integration | CI infra, test suites, maintenance effort | Early bug detection; faster feedback loops | Continuous delivery; large codebases | Removes trivial review work; consistent checks |
| Foster a Collaborative Culture and Provide Constructive Specific Feedback | High: cultural change and coaching | Training, time for thoughtful comments | Knowledge sharing; improved morale and retention | Teams prioritizing learning and mentorship | Stronger team cohesion; developer growth |
| Establish Clear Roles and Responsibilities | Moderate: define owners and escalation | CODEOWNERS, role docs, reviewer rotation | Clear accountability; faster decisions | Complex systems; regulated projects | Ensures expert reviews; reduces bottlenecks |
| Set Response Time Expectations and SLAs | Low–Moderate: agree SLAs and monitor | Tracking tools, dashboards, team buy-in | Predictable turnaround; less stagnant PRs | Time-sensitive delivery teams | Improves predictability; measurable SLAs |
| Balance Automated and Manual Review | Moderate: select/tune tools + process | Tooling plus reviewer training | Efficient reviews; covers objective + subjective issues | Mature teams mixing automation and human review | Optimal reviewer time use; fewer blindspots |
| Document and Track Review Metrics | Moderate: implement measurement & reporting | Analytics tools, dashboards, data collection | Identifies bottlenecks; informs improvements | Teams aiming for data-driven process change | Objective insights; continuous improvement |
| Implement Security-Focused Review Practices | High: integrate security checks and expertise | SAST/DAST tools, security reviewers, training | Fewer vulnerabilities; improved compliance | Security-sensitive or regulated applications | Early detection of security issues; stronger posture |
| Conduct Regular Code Review Process Retrospectives | Low–Moderate: schedule and facilitate retros | Meeting time, surveys, facilitation | Iterative process improvements; removed friction | Agile teams; distributed groups seeking refinement | Adapts process to team needs; increases ownership |

Mastering the art and science of code review is not a destination but a continuous journey. It's an iterative process of refinement, collaboration, and commitment that transforms a simple quality check into the cornerstone of a high-performing engineering culture. Throughout this guide, we've explored a comprehensive set of code review best practices, from the foundational need for clear goals to the advanced strategy of conducting process retrospectives. These are not just items on a checklist; they are the interlocking gears of a well-oiled machine designed to produce superior software.

The path to excellence begins with a shared understanding. When your team collectively agrees on standards, keeps pull requests small and focused, and integrates powerful automation through CI/CD pipelines, you eliminate ambiguity and reduce cognitive load. This foundation allows the human element of review to shine, turning critiques into collaborative coaching sessions rather than adversarial judgments.

As you move forward, keep these core principles at the forefront of your process. They represent the most critical shifts in mindset and methodology that separate good teams from great ones:

Transforming theory into practice requires deliberate action. Here’s a simple roadmap to get you started on implementing these code review best practices immediately:

Ultimately, a world-class code review process is a reflection of a team dedicated to collective ownership and shared excellence. It’s an investment that pays dividends in code quality, system stability, and developer growth. Each review is a small but significant step toward building a more robust, maintainable, and secure product. By embracing these principles, you are not just checking code; you are building a lasting culture of quality, one review at a time.
