
Validating a startup idea is all about systematically testing your core assumptions before you sink a fortune into building the full product. It's a hunt for cold, hard evidence from real people that the problem you see is a problem they feel—and that your solution is something they’d actually open their wallets for.

This process is your single best defense against building something nobody wants.

Let’s be brutally honest: a great idea is worthless without a market. The startup graveyard is overflowing with brilliant concepts that never found an audience. Most startups don't fail because of weak teams or a lack of funding; they fail because they build something nobody needs.

This is where learning how to validate a startup idea becomes your unfair advantage. It’s not some boring item on a checklist; it's the compass that guides you from a back-of-the-napkin sketch to a real, thriving business.

The statistics are a wake-up call. The U.S. Bureau of Labor Statistics reports that a staggering 20% of new businesses go under in their first year. And for startups? The numbers are even grimmer, with some estimates putting the failure rate as high as 90%. Validation turns uncertainty into actionable intelligence, stress-testing your assumptions against the unforgiving reality of the market.

Look at the spectacular flameout of Quibi. They stormed onto the scene in 2020 armed with $1.75 billion in funding and a star-studded lineup, only to shut down six months later. Their core assumption—that people craved high-production, Hollywood-style shows in five-minute chunks on their phones—was never really proven. They built a massively expensive product based on a hunch.

Now, contrast that with Dropbox. Back in 2007, Drew Houston didn't start by building a massive, complicated file-syncing network. Instead, he made a simple three-minute video. It just showed how the product would work, and he posted it on Hacker News.

The reaction was immediate and overwhelming. The beta waitlist skyrocketed from 5,000 to 75,000 people overnight. That simple "smoke test" was all the proof he needed that a huge, painful problem existed and his solution hit the mark.

That one video validated the core business more effectively than millions in seed funding ever could. It confirmed the problem, the solution, and the demand before a single line of complex code was written. That’s the power of lean validation.

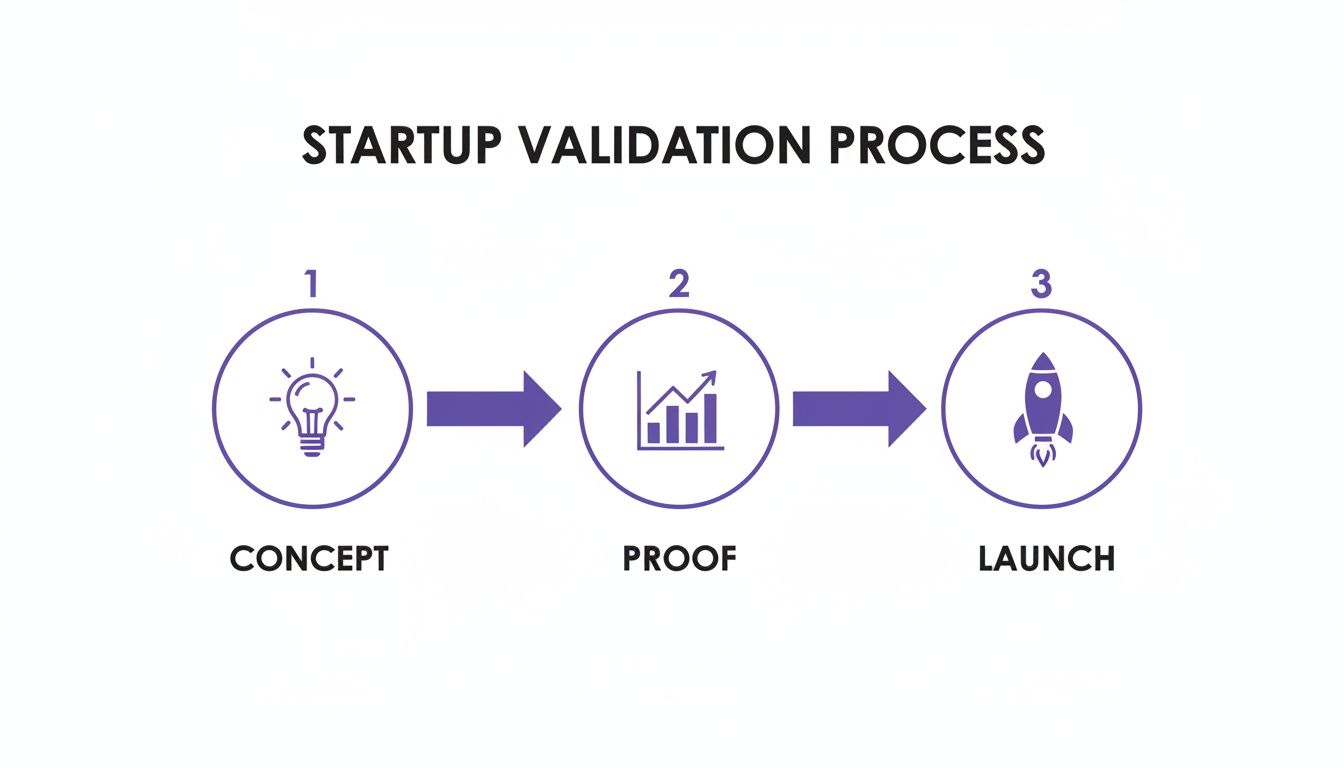

This simple diagram captures the essence of that journey, from concept to launch.

It shows how each stage builds on the last, turning a raw hypothesis into something with real market traction.

To truly understand validation, you have to adopt a mindset of learning, not just building. It means getting out of your office, talking to actual users, and watching their behavior. To keep it simple, I've broken down the process into four core pillars.

This table breaks down the validation process into core stages, giving you a clear mental model to follow throughout this guide.

| Validation Pillar | Core Question | Key Activities |
|---|---|---|
| Problem/Customer | Does a specific group of people have a painful, urgent problem? | Customer interviews, surveys, forum mining, competitor analysis. |
| Solution | Does our proposed solution actually solve that problem for them? | Solution interviews, paper prototypes, mockups, landing pages. |
| Monetization | Are they willing to pay for this solution? How much? | Pricing experiments, pre-orders, "Wizard of Oz" tests. |
| Acquisition | Can we reach and acquire these customers at a sustainable cost? | Paid ad tests, content marketing, manual outreach, viral loops. |

These pillars aren't just steps; they're a continuous loop of building, measuring, and learning that protects you from fatal assumptions. This guide is your playbook for navigating each of these stages, packed with the tools and strategies to test your ideas quickly and affordably.

For a deeper dive into the tactics, exploring how to validate a business idea effectively is a great next step. By committing to this process, you de-risk your entire venture and give yourself a fighting chance to build a business that actually lasts.

Every great product starts here. Before you write a single line of code, design a mockup, or even buy a domain name, you have to do the hard work of validating your core assumption: does anyone actually have the problem you think they do?

This is the whole point of customer discovery. It's less about your brilliant idea and more about becoming an archeologist, digging for evidence of a real, painful problem in your target customer's world.

It’s tempting to skip this part. So many founders are terrified of hearing that their idea isn't needed. But your goal right now isn't to get validation for your solution. It’s to listen. You're searching for the truth about their daily frustrations, their clunky workarounds, and the things they wish they could fix.

A lukewarm "yeah, that sounds neat" is the kiss of death. What you're listening for is the deep sigh, the detailed story about a nightmare workflow, or an exasperated, "I waste so much time doing X!" That's where the gold is.

First things first: you need to find people to talk to. Your friends and family don't count—they love you and are hardwired to be supportive, not critical. You need unbiased feedback from your Ideal Customer Profile (ICP).

The trick is to go where they already hang out.

When you reach out, make it clear you're not selling anything. You're a founder doing research and you value their opinion.

A great problem interview feels more like a therapy session about someone's workflow than a pitch. In fact, you should avoid mentioning your solution entirely. Your job is to shut up and listen.

The most important rule is to ask open-ended questions that get people telling stories.

Crucial Insight: Never, ever ask, "Would you use a product that…?" It's a hypothetical question that gets you feel-good, useless answers. Instead, focus on past behavior. Ask, "Tell me about the last time you struggled with [the problem]." What people have done is a thousand times more telling than what they think they might do.

Your conversation should be structured to uncover their current reality. Start broad to get context about their role, then zoom in on the specific area you're investigating.

Your job is to listen for emotion. Frustration, annoyance, and resignation are powerful signals that you’ve hit a nerve. When you hear it, dig in. A simple "Tell me more about that" can unlock the most valuable insights.

After about 10-15 good conversations, a funny thing will happen. You’ll start hearing the same problems, described with the same language, over and over again. This is pattern recognition, and it’s how you know you're onto something. You're moving from a hunch to a data-backed insight.

Now, organize what you’ve learned. A simple spreadsheet is perfect. Track the key pain points, memorable quotes, and recurring themes from each interview.
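If you'd rather not eyeball the spreadsheet, a few lines of Python can do the tallying. The sketch below is illustrative only: it assumes you tag each interview with short theme labels yourself, and the field names, sample quotes, and 50% cutoff are all hypothetical. Each theme is counted at most once per interview, so one talkative person can't inflate a pattern.

```python
from collections import Counter

# Hypothetical interview log: one entry per conversation, tagged by hand
# with the pain-point themes you heard, plus a memorable quote.
interviews = [
    {"who": "Agency owner A", "themes": ["manual reporting", "client updates"],
     "quote": "I waste so much time building reports by hand."},
    {"who": "Ops lead B", "themes": ["manual reporting", "tool sprawl"],
     "quote": "We copy numbers between three tools every Friday."},
    {"who": "Agency owner C", "themes": ["client updates", "manual reporting"],
     "quote": "Clients keep asking where things stand."},
]

def theme_counts(log):
    """Count how many interviews mentioned each theme (once per interview)."""
    counts = Counter()
    for entry in log:
        counts.update(set(entry["themes"]))
    return counts

def recurring_themes(log, min_share=0.5):
    """Themes mentioned in at least `min_share` of interviews, most common first."""
    counts = theme_counts(log)
    threshold = min_share * len(log)
    return [(theme, n) for theme, n in counts.most_common() if n >= threshold]

for theme, n in recurring_themes(interviews):
    print(f"{theme}: {n}/{len(interviews)} interviews")
```

With the sample data, "manual reporting" (3 of 3) and "client updates" (2 of 3) clear the bar, while "tool sprawl" (1 of 3) does not.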

This evidence is the foundation for everything that comes next. It allows you to write a sharp problem statement and build a rich customer profile based on real conversations, not guesswork. With this validation in hand, you’re finally ready to start thinking about a solution, confident that you’re building something people will actually care about.

Okay, so you've done the hard work. You've spent countless hours in customer discovery interviews, dug deep into their daily struggles, and you're confident you've found a real, painful problem worth solving.

Now comes the moment of truth. It's time to test your biggest assumption: does your solution actually fix the problem? This is where the Minimum Viable Product (MVP) enters the picture, but probably not how you imagine it. Forget about polished apps and thousands of lines of code. The real goal of an early MVP is to learn as much as you can, as fast as you can, with the least amount of work possible.

Think of an MVP less as a small version of your final product and more as a specific experiment. Each one is designed to answer a critical question about your solution and whether people will actually use it. For founders ready to get into the weeds, this guide on how to build a successful MVP offers a more technical roadmap. But for now, let's stick to the lean, fast, and cheap options that get you real-world data without breaking the bank.

Different ideas demand different experiments. You wouldn't validate a complex B2B SaaS platform the same way you'd test a simple mobile game. Picking the right MVP type is essential for getting clear signals from the market before you go all-in.

Here are a few of my favorite low-cost, high-learning MVP models:

The Landing Page MVP: This is the classic demand test, built in the same spirit as Drew Houston's famous Dropbox demo video. You build a single, focused web page that nails your value proposition, highlights the core benefits, and drives visitors to a single call-to-action. Think "Join the Waitlist" or "Get Early Access." Your only goal is to see how many people are interested enough to hand over their email address. It’s a pure demand test.

The "Wizard of Oz" MVP: This is one of the cleverest ways to test a concept. To the user, it looks and feels like a fully automated, slick piece of software. But behind the curtain, you're manually pulling all the levers. Zappos is the legendary example—founder Nick Swinmurn posted photos of shoes from local stores online. When an order came in, he’d literally run to the store, buy the shoes, and ship them himself. He proved people would buy shoes online before he built a single warehouse.

The Concierge MVP: This one is even more hands-on. Instead of faking automation, you are the service. You deliver the entire solution manually for a small, select group of early customers. For instance, if you’re building a personalized meal-planning app, you’d start by personally interviewing your first few clients, creating their meal plans by hand, and emailing the PDFs. The qualitative feedback you get from this is pure gold.

A founder's biggest trap is building too much. We fall in love with our "perfect" vision and start adding features that nobody has asked for. Your first MVP should feel almost embarrassingly simple. If you're not a little bit ashamed of what you're showing people, you've probably waited too long.

This early stage is all about speed and feedback, not perfection.

If you're a non-technical founder, the thought of building even a simple landing page can feel overwhelming. You might think you're stuck until you find a technical co-founder or raise a seed round. This myth kills so many great ideas before they even have a chance.

There’s a much smarter way forward: hire a vetted freelance developer or a small, agile team for a short, tightly scoped project. This isn't about bringing on a full-time employee. It's about a surgical strike: building the exact thing you need to run your next experiment.

Let's paint a picture:

You want to test a "Wizard of Oz" MVP for a new B2B reporting tool. Your manual process works, but it’s clunky. You need a clean, professional-looking front-end interface to make the experience believable for your first pilot customers.

Hiring an experienced developer for 40-60 hours could be all it takes to build that user-facing dashboard. That's it.

This targeted approach has some huge advantages:

By bringing in specialized talent for a specific task, you can get a functional, testable prototype into the hands of real users and gather the data you desperately need to decide what’s next.

All those customer interviews and workflow deep-dives have paid off. You’ve confirmed the problem is real and painful. But now comes the moment of truth.

It's time to stop talking and start collecting hard evidence. The only question that really matters now is: will people actually pay for what you’re planning to build? This is where we shift from conversations to experiments that measure real-world demand, not just polite interest.

These experiments are designed to put your core value proposition to the test. You're hunting for genuine signals of purchase intent—the kind of commitment that separates a cool idea from a viable business.

One of the quickest and most effective ways to start is with a simple landing page test. Think of it as your digital storefront. The goal is to build a single, focused page that nails your value proposition and pushes visitors toward one specific action.

To make it work, you need a few key ingredients:

The metric you're obsessed with here is your Conversion Rate (CVR)—the percentage of visitors who actually take that desired action. For a B2B waitlist, a 5-10% CVR is a solid benchmark, though this can swing wildly depending on your industry and traffic source.
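The arithmetic is simple, but writing it down as a function keeps everyone on the team computing it the same way. This is a minimal sketch with hypothetical traffic numbers; the 5-10% check mirrors the B2B waitlist benchmark above and won't apply to every market.

```python
def conversion_rate(visitors: int, conversions: int) -> float:
    """Percentage of visitors who took the desired action (e.g. joined the waitlist)."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    if not 0 <= conversions <= visitors:
        raise ValueError("conversions must be between 0 and visitors")
    return 100.0 * conversions / visitors

# Hypothetical landing-page numbers: 1,200 visitors, 84 waitlist sign-ups.
cvr = conversion_rate(visitors=1200, conversions=84)
in_b2b_range = 5.0 <= cvr <= 10.0  # rough B2B waitlist benchmark, not a universal rule
print(f"CVR: {cvr:.1f}% (within 5-10% benchmark: {in_b2b_range})")
# → CVR: 7.0% (within 5-10% benchmark: True)
```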

Want to turn up the heat? Run a smoke test. This is essentially a landing page test with a crucial twist: you include a pricing page or a "Buy Now" button.

When someone clicks, you don't actually process a payment. Instead, you can show a message like, "We're launching soon! Enter your email to be notified and get a 20% discount."

This experiment cuts right through the noise. Clicking a "Buy" button is a much stronger signal of demand than a simple email sign-up. It shows someone was ready to pull out their wallet. Later on, when your MVP is live, you’ll want to explore more accelerated testing strategies to get feedback even faster.
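Under the hood, a smoke test only has to do two things: record the click and show the "launching soon" message instead of a checkout. The in-memory `SmokeTest` class below is a hypothetical sketch of that logic, not a real analytics or payments integration; in practice you'd send the same events to whatever analytics tool you already use. It also tracks how many clickers go on to leave an email, since that follow-through is an extra signal of intent.

```python
from dataclasses import dataclass, field

LAUNCH_MESSAGE = ("We're launching soon! Enter your email to be notified "
                  "and get a 20% discount.")

@dataclass
class SmokeTest:
    """Tracks a fake 'Buy Now' button: intent is logged, nobody is charged."""
    visitors: set[str] = field(default_factory=set)
    buy_clicks: set[str] = field(default_factory=set)
    emails: dict[str, str] = field(default_factory=dict)

    def record_visit(self, visitor_id: str) -> None:
        self.visitors.add(visitor_id)

    def click_buy(self, visitor_id: str) -> str:
        # No payment is processed: we only record that the click happened
        # and return the "launching soon" message instead of a checkout page.
        self.visitors.add(visitor_id)
        self.buy_clicks.add(visitor_id)
        return LAUNCH_MESSAGE

    def submit_email(self, visitor_id: str, email: str) -> None:
        self.emails[visitor_id] = email

    def buy_click_rate(self) -> float:
        """Unique 'Buy Now' clickers as a percentage of unique visitors."""
        if not self.visitors:
            return 0.0
        return 100.0 * len(self.buy_clicks) / len(self.visitors)

    def email_follow_through(self) -> float:
        """Of the visitors who clicked 'Buy Now', the percentage who also left an email."""
        if not self.buy_clicks:
            return 0.0
        followed = self.buy_clicks & set(self.emails)
        return 100.0 * len(followed) / len(self.buy_clicks)

# Hypothetical run: 50 visitors, 3 click "Buy Now", 1 of them leaves an email.
test = SmokeTest()
for i in range(50):
    test.record_visit(f"v{i}")
for vid in ("v3", "v17", "v42"):
    test.click_buy(vid)
test.submit_email("v17", "founder@example.com")
print(f"Buy-click rate: {test.buy_click_rate():.1f}%")                      # → Buy-click rate: 6.0%
print(f"Left an email after clicking: {test.email_follow_through():.1f}%")  # → Left an email after clicking: 33.3%
```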

Pro Tip: Don't just watch the final conversion number. Use a tool like Hotjar or Microsoft Clarity to add heatmaps and session recordings to your page. Seeing where people click, scroll, and get stuck gives you priceless clues about what’s working and what's confusing them.

So, how do you get people to these test pages? While you can try organic channels, running small-budget ad campaigns on platforms like LinkedIn, Facebook, or Google is the fastest way to get targeted traffic and data.

Let's say your ideal customer is a "marketing manager at a mid-sized tech company." LinkedIn lets you target that exact profile. You can then test different headlines and value props in your ads to see what resonates most.
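To compare ad variants on equal footing, it helps to compute the same three numbers for each: click-through rate (CTR), cost per click (CPC), and the landing-page conversion rate (CVR) of the traffic each ad sends. The sketch below assumes you've exported impressions, clicks, spend, and attributed conversions per ad by hand; the `AdVariant` class and all figures are hypothetical, not any ad platform's API.

```python
from dataclasses import dataclass

@dataclass
class AdVariant:
    name: str
    impressions: int
    clicks: int
    spend: float      # total spend, in your ad account's currency
    conversions: int  # landing-page sign-ups attributed to this ad

    @property
    def ctr(self) -> float:
        """Click-through rate: clicks / impressions, as a percentage."""
        return 100.0 * self.clicks / self.impressions if self.impressions else 0.0

    @property
    def cpc(self) -> float:
        """Cost per click (infinite if the ad got no clicks)."""
        return self.spend / self.clicks if self.clicks else float("inf")

    @property
    def cvr(self) -> float:
        """Conversion rate of this ad's landing-page traffic, as a percentage."""
        return 100.0 * self.conversions / self.clicks if self.clicks else 0.0

# Hypothetical headline test aimed at the same audience.
variants = [
    AdVariant("Save hours on client reports", impressions=8000, clicks=96, spend=480.0, conversions=9),
    AdVariant("All your projects in one place", impressions=8000, clicks=40, spend=320.0, conversions=2),
]

for v in sorted(variants, key=lambda v: v.cvr, reverse=True):
    print(f"{v.name}: CTR {v.ctr:.2f}%, CPC {v.cpc:.2f}, CVR {v.cvr:.1f}%")

best = max(variants, key=lambda v: v.cvr)
```

Ranking by CVR rather than CTR is a deliberate choice here: a catchy headline can win clicks from people who never sign up.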

Here are the metrics to live and die by:

Choosing the right experiment depends entirely on your goal, budget, and how much evidence you need. Here's a quick comparison to help you decide what's right for your stage.

| Experiment Type | Primary Goal | Typical Cost | Key Metric to Track |
|---|---|---|---|
| Landing Page Test | Gauge initial interest in the value prop. | $50 – $500 | Waitlist Sign-up CVR |
| Smoke Test | Measure actual purchase intent. | $50 – $500 | "Buy Now" or "Pre-order" Click Rate |
| Paid Ad Campaign | Find target audience & test messaging. | $200 – $1,000+ | CTR, CPC, CVR from Ad Traffic |
| Pilot Customer | Validate workflow & willingness to pay. | $0 – $500 (revenue) | Pre-payment or signed contract |

By running these controlled experiments, you shift from guesswork to evidence. You’re no longer just asking people what they think they’ll do; you’re watching what they actually do. This is the hard data you need to decide whether to build, pivot, or go back to the drawing board—before you’ve burned through your budget building something nobody wants.

You’ve run the experiments and you've talked to the people. Now your screen is a chaotic mix of survey results, conversion rates, and scribbled interview notes. This is often the trickiest part of the whole validation process—how do you turn this messy, real-world feedback into a clear "go" or "no-go"?

Let's be real: the data rarely hands you a perfect, clean answer. It gives you clues. Your job is to act like a detective, piecing together the story told by both the hard numbers and the human conversations. This is where you make the tough call to either stick with your current plan, pivot to a new one, or wisely decide to shelve the idea.

The goal isn't just to stare at the metrics. It's to synthesize them. What story emerges when you put your low click-through rate next to a customer quote that says, "I'd never pay for this, but my boss would"? A low conversion rate on its own might feel like a failure, but when paired with feedback like that, it's actually a hugely valuable course correction.

One of the most effective ways to stay objective is to define what success looks like before you even launch an experiment. These are your guardrail metrics—the clear, pre-determined benchmarks that keep you honest and prevent you from moving the goalposts when the results come in.

This simple step is your best defense against confirmation bias. You're forcing your future self to answer the question, "What specific result will give us the confidence to invest more time and money?"

Here’s how this looks in the real world:

These aren't just numbers you pull out of thin air. They should be grounded in reality—informed by industry averages and what you know needs to be true for your business model to actually work.
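One lightweight way to make guardrails binding is to write them down (in code or a shared doc) before launch and evaluate the results mechanically afterward. This is a sketch under stated assumptions: the metric names and thresholds are hypothetical placeholders you'd replace with the numbers your business model actually needs, and a metric you forgot to measure counts as a failure rather than a pass.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Guardrail:
    metric: str
    minimum: float  # pre-committed threshold, written down before launch

# Hypothetical thresholds, fixed BEFORE the experiment runs.
GUARDRAILS = (
    Guardrail("waitlist_cvr_pct", 5.0),
    Guardrail("buy_click_rate_pct", 3.0),
    Guardrail("interviews_with_strong_pain", 8),
)

def evaluate(results: dict[str, float], guardrails=GUARDRAILS) -> tuple[bool, list[str]]:
    """Return (all_passed, failure descriptions). Unmeasured metrics count as failures."""
    failures = []
    for g in guardrails:
        value = results.get(g.metric)
        if value is None:
            failures.append(f"{g.metric}: not measured")
        elif value < g.minimum:
            failures.append(f"{g.metric}: {value} < {g.minimum}")
    return (not failures, failures)

passed, failures = evaluate({"waitlist_cvr_pct": 12.0,
                             "buy_click_rate_pct": 2.0,
                             "interviews_with_strong_pain": 11})
print("GO" if passed else "NOT YET", failures)
# → NOT YET ['buy_click_rate_pct: 2.0 < 3.0']
```

Freezing the `Guardrail` objects is a small nudge against quietly editing a threshold after the results come in.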

Once you have something tangible—even a clunky prototype—you need a way to measure if it's truly resonating. The Sean Ellis Test is a famously simple but surprisingly powerful survey for your earliest users.

It’s all built around a single, critical question: "How would you feel if you could no longer use [our product]?" Users get three choices: "Very disappointed," "Somewhat disappointed," or "Not disappointed."

The magic number here is 40%. If 40% or more of your users say they’d be "Very disappointed" to lose your product, that's a massive signal. It means you’ve created something that a core group of people genuinely needs, not just a nice-to-have.
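Scoring the survey takes only a few lines. This sketch assumes responses arrive as the exact three answer strings; the sample of 50 responses is made up to show a result that falls just short of the 40% bar.

```python
from collections import Counter

CHOICES = ("Very disappointed", "Somewhat disappointed", "Not disappointed")

def sean_ellis_score(responses):
    """Percentage of respondents who answered 'Very disappointed'."""
    counts = Counter(responses)
    unknown = set(counts) - set(CHOICES)
    if unknown:
        raise ValueError(f"unexpected answers: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no responses")
    return 100.0 * counts["Very disappointed"] / total

# Hypothetical survey of 50 early users.
responses = (["Very disappointed"] * 18
             + ["Somewhat disappointed"] * 20
             + ["Not disappointed"] * 12)
score = sean_ellis_score(responses)
print(f"{score:.0f}% very disappointed; passes 40% bar: {score >= 40}")
# → 36% very disappointed; passes 40% bar: False
```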

The final decision always comes down to blending the quantitative with the qualitative. Seeing your data visually can make these connections jump off the page. If you're new to this, our guide on data visualization best practices is a great place to start.

Let's imagine you just ran a "smoke test" for a new project management tool. Here's how you'd connect the dots:

| Data Point | The Good News | The Bad News | The Decision |
|---|---|---|---|
| Landing Page CVR | A solid 12% of visitors joined the waitlist. | Only 2% actually clicked the "Pre-order" button. | Interest is high, but the willingness to pay is weak. |
| Ad Campaign CTR | The ad aimed at "creative agencies" got a 3.5% CTR. | The ad for "software developers" only managed 0.4%. | The idea clearly resonates with one niche but falls flat with another. |
| User Feedback | "This would save me hours!" (agency owner) | "Another PM tool? We have three already." (developer) | The quotes confirm what the ad data suggested. |

The Verdict: This isn't a failure—it's a crystal-clear signal to pivot. Your initial idea was too broad. The data is practically screaming at you to forget developers for now and focus entirely on solving this problem for creative agencies.

This entire process shows why proper validation is so critical. An analysis of 2,847 startup ideas found that validated concepts achieve success rates up to 5x higher. They also shrink development timelines from months down to weeks and save founders an average of $50,000 in wasted costs. By making smart, data-driven pivots, founders can avoid common pitfalls and find a path to a fundable business. You can explore the full industry benchmarks and insights to see exactly how this works in practice.

As you dive into validating your idea, you're bound to run into some common hurdles and questions. It’s part of the process. Let’s clear up a few of the most frequent ones I hear from founders.

Forget about hitting a magic number. What you're really listening for are patterns.

The goal is to reach what researchers call thematic saturation. It’s that point where you can almost predict what the next person is going to say about their problems because you’ve heard it so many times. You’re hearing the same pain points, described with similar words, over and over again.

For a well-defined customer segment, this usually starts happening after 10-20 solid interviews. If you're 20 conversations in and still getting completely new, left-field problems, it’s a red flag that your target audience might be too broad.

The real signal isn't a number. It's the moment you can confidently predict your next interviewee's biggest frustrations. That's your cue that you’ve found a real, consistent problem worth solving.
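If you want a concrete stopping rule, one simple heuristic (not a formal research method) is to stop when several interviews in a row add no new themes. The sketch assumes you log each conversation as a set of theme labels, in the order the interviews happened; the window of three interviews and the sample labels are arbitrary choices for illustration.

```python
def saturation_point(interviews, window=3):
    """
    Return the 1-based index of the interview that completes a run of
    `window` consecutive interviews with no new themes, or None if that
    never happens. `interviews` is an ordered list of theme sets.
    """
    seen = set()
    streak = 0  # consecutive interviews that added nothing new
    for i, themes in enumerate(interviews, start=1):
        new = set(themes) - seen
        seen |= new
        streak = 0 if new else streak + 1
        if streak >= window:
            return i
    return None

# Hypothetical log of six interviews.
log = [
    {"manual reporting", "client updates"},
    {"manual reporting", "tool sprawl"},
    {"client updates", "approval delays"},
    {"manual reporting"},
    {"tool sprawl", "client updates"},
    {"manual reporting", "approval delays"},
]
print(saturation_point(log))  # → 6
```

If this keeps returning `None` well past 20 interviews, that lines up with the red flag above: the segment may be too broad.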

People throw these terms around as if they’re the same thing, but they serve completely different purposes. Getting this right is critical.

A prototype is all about usability and design. It’s a low-fidelity (or sometimes high-fidelity) mockup—think clickable Figma designs or even paper sketches. Its job is to answer one simple question: “Can people figure out how to use this?” It’s not meant to be functional, just a way to test the user flow and experience.

An MVP (Minimum Viable Product) is a different beast entirely. It’s the smallest, most basic version of your product that actually works and delivers a core piece of value to a real user. Its purpose is to answer a much bigger question: “Should we even build this thing at all?” An MVP tests market demand and your core business assumptions with real stakes.

You can get started for a lot less than you think. In fact, some of the most powerful validation work costs you nothing but your time.

Of course, when it’s time for a functional MVP, the costs go up. But even then, you don't need to sink your life savings into it. Instead of hiring a full-time team prematurely, a much smarter move is to bring in specialized help for a short-term build. For a complete breakdown of this approach, check out our guide on how to hire developers for a startup to get your MVP built efficiently. It's the best way to get the data you need without the risk of a massive upfront investment.
