Before you write a single line of code, an MVP really starts with one core question: what’s the absolute minimum we can build to see if this idea has legs? It’s all about focusing on your core value proposition and the specific user problem you’re solving, then building just enough to test your assumptions with real people. This isn't about launching a feature-rich product; it's about validated learning to make sure you're spending time and money on the right things from day one.

The whole point is to get a working version, however simple, into the hands of early adopters—fast.

Think of building an MVP less as a product launch and more as starting a conversation with your market. It’s a strategic experiment designed to answer a fundamental question: Does anyone actually want this? Getting that answer before sinking a significant investment into development is the real magic of the MVP approach.

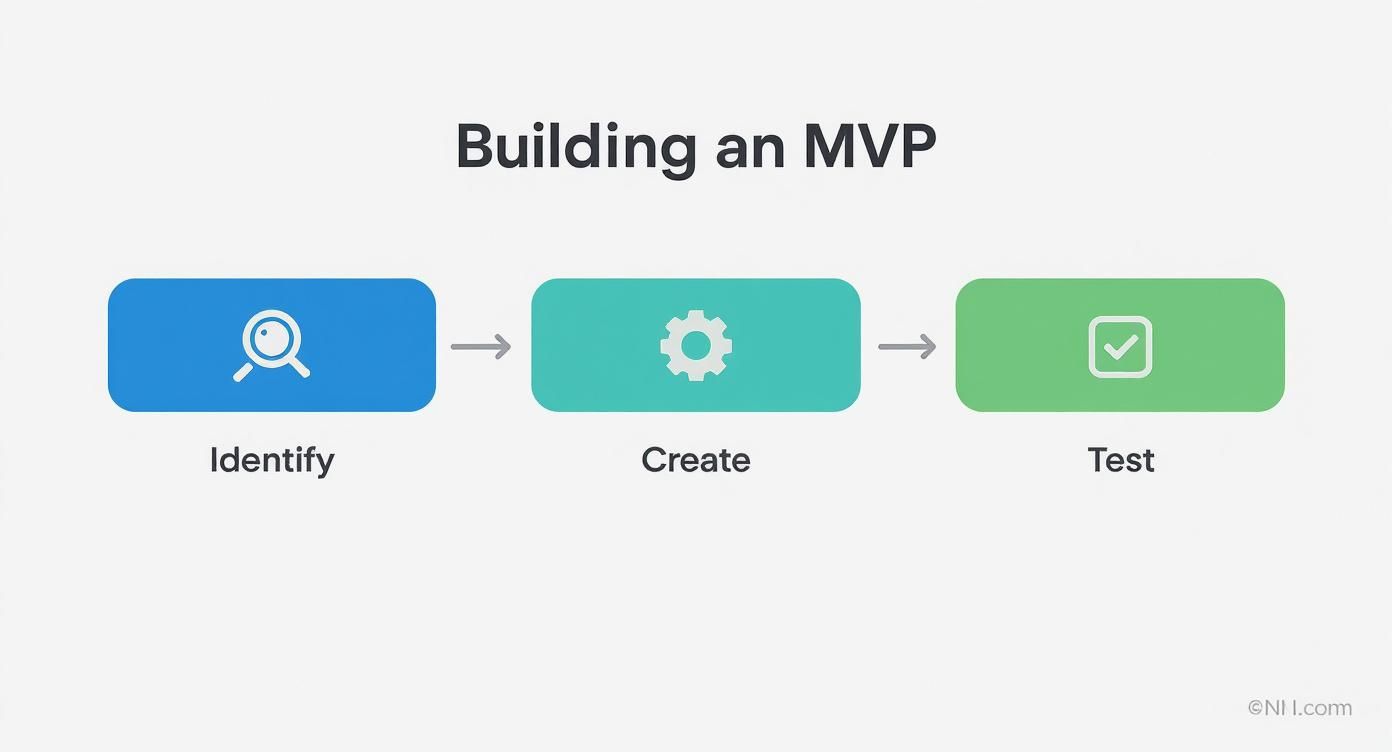

This whole concept is rooted in the Lean Startup methodology, which champions rapid experimentation. It’s a simple loop: you build the smallest possible version of your product, measure how people actually use it, and then learn what to improve, what to change, or even when to walk away.

This feedback loop is crucial because it tackles the single biggest risk a new venture faces. A staggering 34% of startup failures are chalked up to poor product-market fit. That’s why that initial market deep-dive is non-negotiable. You have to get out there and talk to people through customer interviews, run surveys, and do some competitor analysis to find those genuine gaps in the market before you commit to a full-scale build. You can find more insights on mitigating this risk in this startup MVP development guide.

Every great MVP is built around a clear, testable hypothesis. Instead of a vague goal like, "We'll build a platform for local artists," you need something specific. A much better hypothesis would be, "We believe local artists will pay $10 a month for a simple portfolio page that connects them with local buyers." This sharpens your focus onto a single, measurable outcome.

Let's imagine a subscription box service for rare houseplants. Instead of building a full-blown e-commerce site with complex logistics and inventory management, the MVP could just be a simple landing page.

An MVP isn't a cheaper product; it's a smarter way to build the right product. It forces you to make tough calls about what truly delivers value, saving you from wasting months building features nobody will ever use.

You don't need a huge budget for this. Some quick, lightweight tactics can give you a ton of insight. Start by figuring out where your target audience hangs out online—maybe it's specific Reddit communities, Facebook groups, or niche forums.

Lightweight Competitor Audit

Forget a deep, exhaustive analysis. Just do a quick audit of 3-5 direct or indirect competitors. Zero in on a few key things:

Getting this initial groundwork done sets the stage for everything that follows. When you start with a sharp hypothesis and a clear picture of the landscape, you can define a lean feature set that actually solves a real-world problem.

To pull this all together, this table breaks down the core principles that should guide your MVP development, from the initial spark of an idea to getting real-world validation.

| Principle | Description | Key Action |
|---|---|---|
| Focus on Core Value | The MVP must solve at least one primary problem for its target users. | Identify the single most important user pain point to address. |
| Minimize Features | Include only the essential features needed to deliver the core value. | Ruthlessly prioritize and cut anything that is a "nice-to-have." |
| Launch Quickly | The goal is to get the product into real users' hands as fast as possible. | Set a strict timeline (e.g., 2-4 months) and stick to it. |
| Gather Feedback | The primary purpose of an MVP is to learn from user interaction and data. | Implement basic analytics and channels for direct user feedback. |
| Iterate and Evolve | Use the collected feedback to inform the next development cycle. | Create a roadmap for improvements based on validated learning. |

Sticking to these principles keeps your team aligned and ensures that every decision you make is focused on the ultimate goal: building a product that people will actually pay for and use.

So, you’ve validated your idea. You know there’s a real problem out there that needs solving. The immediate temptation is to dive in and build a solution that does everything for everyone. Resist that urge. This is where most early-stage products go off the rails, falling victim to the dreaded scope creep.

Knowing what to build—and, more importantly, what not to build—is what separates a successful MVP from an expensive, bloated prototype that never sees the light of day. The goal isn't to ship a watered-down version of your grand vision. It's about delivering a concentrated dose of value that solves your user's single most pressing problem, and does it well. This requires ruthless prioritization.

Think of it as a continuous learning cycle. You identify a problem, build just enough to solve it, and get it in front of real users. Their feedback then loops directly back into what you build next, preventing wasted effort and ensuring you stay aligned with what the market actually wants.

Gut instinct is great, but when you're deciding what to spend precious time and money on, you need something more objective. This is where prioritization frameworks come in handy. They force you to justify every feature’s existence, moving the conversation from "what can we build?" to "what must we build to learn?"

Two of the most practical methods I've used are the MoSCoW method and the impact-versus-effort matrix.

The MoSCoW method is a straightforward way to bucket your feature ideas:

Another indispensable tool is the impact-versus-effort matrix. It’s a simple 2×2 grid that helps you visualize where to focus your energy for the biggest return.

You just plot each feature on the grid based on two simple questions:

Your MVP should be built almost exclusively from the high-impact, low-effort quadrant. These are your quick wins—features that deliver a ton of value for minimal development cost. High-impact, high-effort features are your big, strategic bets for later on. Anything with low impact should be shelved, probably forever.
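As a rough illustration of the quadrant rule above, here is a minimal Python sketch. The 1-to-10 scoring scale, the threshold of 5, the quadrant labels, and the sample backlog are all assumptions made for the example, not part of any standard framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Feature:
    name: str
    impact: int  # 1 (low) to 10 (high), a hypothetical team estimate
    effort: int  # 1 (low) to 10 (high), a hypothetical team estimate


def quadrant(feature: Feature, threshold: int = 5) -> str:
    """Place a feature in one cell of the 2x2 impact-versus-effort grid."""
    high_impact = feature.impact > threshold
    high_effort = feature.effort > threshold
    if high_impact and not high_effort:
        return "quick win"      # candidate for the MVP
    if high_impact and high_effort:
        return "strategic bet"  # schedule for later
    return "shelve"             # low impact, regardless of effort


# Hypothetical backlog, for illustration only.
backlog = [
    Feature("email sign-up", impact=9, effort=3),
    Feature("recommendation engine", impact=8, effort=9),
    Feature("dark mode", impact=2, effort=2),
]

mvp_scope = [f.name for f in backlog if quadrant(f) == "quick win"]
print(mvp_scope)  # ['email sign-up']
```

The scores themselves are still judgment calls; the value of writing them down is that every feature has to earn its place with an explicit estimate.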

Your MVP's feature set is not a commitment for life; it's a starting point for a conversation with your users. The goal is to build just enough to learn what they truly value, not to deliver a perfect, all-encompassing solution.

The data backs this up. Companies that launch with an MVP are 62% more likely to succeed. And while 85% of product managers see MVPs as essential, a worrying 35% of companies admit they still add features just to close sales deals—a trap that a disciplined MVP process helps you sidestep.

Finally, every single feature that makes the cut must be directly tied to a user need. This is where user stories and journey maps come in. A user story is a simple but powerful tool for framing work from the user's point of view: "As a [type of user], I want [an action] so that [a benefit]."

For instance, if you're building a lean analytics dashboard:

That small shift gives the "why" behind the work, which leads to smarter product decisions. To make sure your priorities are data-driven, you can use feature prioritization survey templates to get direct input from your target audience.

A user journey map helps you visualize the entire experience someone has with your product, from the moment they hear about it to when they achieve their goal. By mapping this out, you can pinpoint the most critical moments—the touchpoints where your MVP needs to shine—and ensure your features connect in a logical way to solve a complete problem from start to finish.

Once your idea is validated and your scope is defined, it's time to make two of the most critical decisions you'll face: what technology to use and who will build it. These choices will directly impact your speed, budget, and ability to scale later on.

The "best" tech stack is the one that gets your MVP in front of users the fastest without boxing you in. Don't over-engineer it.

No-code platforms like Bubble are fantastic for getting a simple prototype or landing page up in days, not weeks. However, they can hit a wall when you need custom logic or have to handle a lot of traffic. On the other end of the spectrum, a full-code stack such as React on the front end with Node.js on the back end gives you complete control and scalability, but it comes with a steeper learning curve and a longer development timeline.

This is where feature prioritization methods, like MoSCoW, come in handy. By focusing only on the "Must-Have" features, you can make a much more realistic tech decision.

As the visual shows, your MVP is built on that "Must-Have" foundation. Everything else can wait. With that in mind, many startups find a sweet spot with a hybrid approach, letting them launch an initial version in just two weeks while leaving the door open for more complex features later.

To help you decide, here’s a quick breakdown of the common paths founders take. Think about where your MVP fits in terms of complexity, budget, and long-term goals.

| Technology Type | Pros | Cons | Best For |
|---|---|---|---|
| No-Code | Extremely fast setup; very low cost | Limited custom logic; poor scalability | Simple landing pages, basic workflow validation, internal-only tools. |
| Low-Code | Faster than full-code; some customization | Vendor lock-in; can have high licensing fees | Rapid interactive prototypes, more complex internal applications. |
| React + Node.js | Full control; highly scalable | Longer setup; requires skilled developers | Customer-facing apps, products expected to handle high traffic. |
| Hybrid | Balances speed with future flexibility | Can add integration complexity | Fintech apps with secure backends, products launching in phases. |

Ultimately, the goal is to choose the simplest stack that can deliver your core value proposition. You can always rebuild or migrate later once you have traction and funding.

You don't need a huge team for an MVP. What you need are the right roles filled by people who can wear multiple hats and move quickly. Overstaffing is a classic startup mistake that burns cash with no real return.

Here’s a look at the essential players for a lean MVP build:

"Hiring pre-vetted remote talent cut our MVP timeline by 30%, accelerating our launch."

Finding the right people is tough. When vetting remote developers, skip the brain teasers and focus on practical skills.

If you need to assemble a team quickly, you might want to explore your options for outsourcing custom software development.

With a remote team, clear communication is non-negotiable. It’s what keeps the project from derailing.

So, what does a team like this cost? It varies wildly by location. A developer in Eastern Europe might average $35/hour, while a U.S.-based developer can easily be $100/hour or more.

Let’s run some numbers. A lean team of four (PM, designer, two developers) working for six weeks at 40 hours/week could cost around $34,400, assuming a blended rate.
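To make that estimate easier to check, here is a small Python sketch of the arithmetic. It assumes every team member bills the same blended hourly rate, which is a simplification; the `labor_cost` helper and the alternative scenarios are hypothetical. Working backward, four people for six weeks at 40 hours/week is 960 hours, so the $34,400 figure implies a blended rate of about $35.83/hour.

```python
def labor_cost(team_size: int, weeks: int, hours_per_week: int, blended_rate: float) -> float:
    """Total labor cost, assuming everyone bills the same blended hourly rate."""
    return team_size * weeks * hours_per_week * blended_rate


total_hours = 4 * 6 * 40             # four people, six weeks, 40 hours/week
implied_rate = 34_400 / total_hours  # blended rate implied by the $34,400 estimate

print(total_hours)             # 960
print(round(implied_rate, 2))  # 35.83

# Turning one knob at a time (illustrative scenarios, not quotes):
print(labor_cost(4, 6, 40, 100))                     # same team at $100/hour: 96000
print(round(labor_cost(3, 6, 40, implied_rate), 2))  # one fewer team member
```

Seen this way, the hourly rate is the most sensitive knob: the same team at the U.S. rate quoted above costs nearly three times as much.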

You can adjust the knobs to fit your budget:

Once you’ve got your scope, tech stack, and team sorted, the real fun begins. You're now in the engine room of MVP development: a fast, continuous cycle of building, testing, and learning. This isn't a long, drawn-out process where you disappear for months and then emerge with a "finished" product. Instead, it’s a series of short, focused sprints designed to turn your assumptions into hard facts as quickly as possible.

The whole MVP approach is built on these feedback loops. It’s a philosophy that Eric Ries popularized in "The Lean Startup," and it's a huge reason why the global MVP development market is expected to jump from USD 288 million to USD 541 million. The model is simple: learn from what real people do with your product, rather than guessing in isolation. You can see more on the expanding MVP market and its methodologies.

This iterative process is what stops you from sinking six months of work into something nobody actually wants. You build a tiny piece of functionality, get it in front of users, and find out right away if you're heading in the right direction.

Your development should be broken down into sprints—short, time-boxed periods, usually lasting one or two weeks. Each sprint needs a clear, achievable goal. The point isn't to cram in as many features as you can, but to deliver a single, testable piece of value.

For instance, your first sprint goal might be as simple as: "Implement user sign-up and login via email." The next one could be: "Allow users to create a basic profile." This sharp focus keeps the team on track and makes your progress easy to measure.

This is where project management tools like Jira or Trello become invaluable. They help you see your workflow, track every task, and make sure everyone is on the same page. Honestly, a simple Kanban board with "To Do," "In Progress," and "Done" columns is often all you need to keep things moving. The idea is to stay lightweight and avoid getting bogged down in administrative fluff. If you want to go deeper, understanding the specific roles in agile software development is key to making these sprints hum.

Before a single line of code reaches a real user, it absolutely has to be tested internally. This is your first line of defense against embarrassing bugs and a clunky user experience. Even when you're in a rush to launch, do not skip this.

Put together a straightforward internal QA checklist that covers the core functionality you just built.

Example QA Checklist for a "Create Profile" Feature:

This kind of focused, internal testing can save you from major headaches. I once worked on an app where a quick usability test with just five team members revealed our onboarding was so confusing that three out of five couldn't even finish it. Finding that out before launch saved us from a total disaster.

The goal of an MVP isn't to be bug-free, but it must be stable enough to deliver on its core promise. A few rough edges are acceptable; a product that constantly crashes is not.

Once your feature is live with a small group of early users, the learning really kicks into high gear. You'll be gathering two critical types of feedback: qualitative and quantitative.

Quantitative data is the "what." This is the raw data from your analytics tools that tells you what users are doing.

Qualitative data is the "why." This comes from actually talking to your users through interviews, surveys, and feedback forms. It explains why they behave a certain way.

With all this feedback in hand, the final step is to translate it into concrete tasks for the next sprint. Every piece of user feedback—whether it's a bug report or a feature idea—should be logged and prioritized. This raw input becomes the fuel for your product roadmap, ensuring every development cycle is guided by what users truly need, not just what you think they need.

Getting your MVP into the hands of real users isn’t the finish line—it’s the starting gun. This is where the real learning begins. All the assumptions you've made are about to be tested, and how users actually behave will dictate your next move.

Your initial launch strategy really sets the tone for this whole process. You don't need a huge, splashy public release from day one. In fact, for most startups, a more controlled, deliberate approach is much smarter.

You've got two main ways to go about this:

For most teams just starting out, the soft launch is the way to go. It lets you build a real connection with your first users and fix the most glaring problems before they can damage your reputation.

You can't learn from what you don't measure. Before a single person signs up, your analytics have to be ready to go. Thankfully, getting the basics in place is quick and will give you the data you need to make smart calls instead of just guessing.

Tools like Google Analytics are a good start for seeing where your traffic comes from. But for an MVP, you need to go deeper. Event-based tools like Mixpanel or Amplitude are designed for this. They let you track specific actions people take, like "Created First Project" or "Invited a Friend."
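To show what event-based tracking buys you, here is a minimal Python sketch that works on a plain list of events. It does not use the Mixpanel or Amplitude APIs; the event log, the "Signed Up" event, and the "activation rate" label are assumptions made for illustration.

```python
from collections import defaultdict

# Hypothetical event log: (user_id, event_name) pairs, as an event-based
# analytics tool might export them. Names and data are illustrative only.
events = [
    ("u1", "Signed Up"),
    ("u1", "Created First Project"),
    ("u2", "Signed Up"),
    ("u3", "Signed Up"),
    ("u3", "Created First Project"),
    ("u3", "Invited a Friend"),
    ("u4", "Signed Up"),
]


def users_by_event(log):
    """Group the set of distinct users under each event name."""
    grouped = defaultdict(set)
    for user_id, event_name in log:
        grouped[event_name].add(user_id)
    return grouped


def conversion_rate(log, from_event, to_event):
    """Share of users who did `from_event` and also did `to_event`."""
    grouped = users_by_event(log)
    base = grouped[from_event]
    if not base:
        return 0.0
    return len(base & grouped[to_event]) / len(base)


activation = conversion_rate(events, "Signed Up", "Created First Project")
print(f"Activation rate: {activation:.0%}")  # Activation rate: 50%
```

Four sign-ups look the same in a traffic report, but the event log shows that only two of those users reached the core feature.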

Don’t drown yourself in data. To start, just track the 3-5 key metrics that prove users are actually getting value from your core feature. Everything else is just noise right now.

Focus on the numbers that tell a story about how engaged people are, not just how many showed up.

It’s incredibly tempting to get excited about 10,000 page views or 1,000 new sign-ups. But these are vanity metrics. They feel good, but they tell you absolutely nothing about the health of your product or whether you have a viable business.

You need to track actionable metrics that reflect real user value.

Here are the essentials for your MVP dashboard:

These metrics give you a clear, unfiltered look at how your MVP is really doing. For a more detailed breakdown, our guide on choosing the right KPIs for software development can help you build out a solid tracking plan.

A simple dashboard—even just in a Google Sheet—that displays this data live keeps the whole team focused and honest. With this information in hand, you'll know exactly what to do next: pivot, persevere, or prepare to scale.

Building an MVP tends to raise the same critical topics: cost, timing, and terminology. Founders and product teams need clear, experience-driven answers to steer their projects in the right direction. Below, you’ll find concise insights drawn from real-world MVP launches.

These explanations skip the fluff and give you the confidence to make strategic choices at every stage.

Budgets for an MVP can swing dramatically. I’ve seen basic no-code versions come together for $5,000, while enterprise-grade apps with custom integrations easily exceed $100,000.

Key cost drivers to watch:

In one fintech project, we trimmed features to the absolute essentials and slashed the budget by 40%. The lesson: spend just enough to validate your core hypothesis before adding bells and whistles.

MVP and prototype aren’t interchangeable. They serve distinct goals and communicate very different things to stakeholders.

A prototype answers, “Can users understand this?”

An MVP answers, “Will users actually use this?”

Chasing perfection can stall your momentum. Instead, look for these three indicators:

In one e-commerce test, we launched with just checkout and inventory alerts. The feedback was so positive that adding wish lists became our top priority for the next sprint.

Launch is not the finish line—it’s the start of learning.
